[Ascend] Multi-Card Distributed Training: Official Examples


[naie@notebook-npu-bde28a8c-568c5bcb58-mlj9c multi_GPU_training]$ netstat -tulnp | grep 29500

[naie@notebook-npu-bde28a8c-568c5bcb58-mlj9c multi_GPU_training]$ netstat -tulnp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 10.234.91.164:8888 0.0.0.0:* LISTEN 367/python

tcp6 0 0 10.234.91.164:2222 :::* LISTEN 210/java

[naie@notebook-npu-bde28a8c-568c5bcb58-mlj9c multi_GPU_training]$ netstat -tulnp | grep 8888

tcp 0 0 10.234.91.164:8888 0.0.0.0:* LISTEN 367/python

[naie@notebook-npu-bde28a8c-568c5bcb58-mlj9c multi_GPU_training]$ netstat -tulnp | grep 2222

tcp6 0 0 10.234.91.164:2222 :::* LISTEN 210/java

[naie@notebook-npu-bde28a8c-568c5bcb58-mlj9c multi_GPU_training]$ netstat -tulnp | grep localhost

[naie@notebook-npu-bde28a8c-568c5bcb58-mlj9c multi_GPU_training]$ netstat -tulnp | grep 65536

[naie@notebook-npu-bde28a8c-568c5bcb58-mlj9c multi_GPU_training]$ netstat -tulnp | grep 'localhost'

[naie@notebook-npu-bde28a8c-568c5bcb58-mlj9c multi_GPU_training]$
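The netstat commands above check whether port 29500, the default `--master_port` of torchrun and torch.distributed.launch, is already taken before it is used as the master port. The empty `grep 29500` result means nothing is listening on it. Because `-n` makes netstat print numeric addresses, `grep localhost` can never match (127.0.0.1 would appear instead), and 65536 is outside the valid TCP port range of 0 to 65535. The same check can be sketched in Python by trying to bind the port. `port_is_free` is a hypothetical helper, and binding to 127.0.0.1 is an assumption matching the single-node `localhost` setup used later. A listener bound only to another address (such as 10.234.91.164:8888 above) is not detected by this check.

```python
import socket


def port_is_free(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if a TCP socket can be bound to (host, port) right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            # Address already in use (or binding not permitted): treat as not free.
            return False
    return True


if __name__ == "__main__":
    # 29500 is the default --master_port of torchrun / torch.distributed.launch.
    print(29500, "free" if port_is_free(29500) else "in use")
```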

01 Single-Card Training

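After configuring the environment variables, run the single-card training script to start training. An example command follows (the parameters are only examples; adjust them to your own setup):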

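# --batch-size: training batch size; preferably a multiple of the processor core count for better performance
# --data_path: dataset path
# --lr: learning rate
# --epochs: number of training epochs
# --arch: model architecture
# --world-size / --rank: a single process with rank 0
# --workers: number of data-loading processes
# --momentum: momentum
# --weight-decay: weight decay
# --gpu: device ID; the parameter is still named gpu, but after migration the training device is defined as npu in the code
python3 main.py --batch-size 128 \
    --data_path /home/data/resnet50/imagenet \
    --lr 0.1 \
    --epochs 90 \
    --arch resnet50 \
    --world-size 1 \
    --rank 0 \
    --workers 40 \
    --momentum 0.9 \
    --weight-decay 1e-4 \
    --gpu 0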
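The training script has to declare every flag used in that command. A minimal argparse sketch (a hypothetical subset of the flags, with defaults chosen for illustration rather than taken from main.py) also shows a detail that matters later: argparse turns hyphens in option names into underscores, so `--batch-size` is read back as `args.batch_size` and `--weight-decay` as `args.weight_decay`.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical subset of the flags from the single-card command.
    parser = argparse.ArgumentParser(description="single-card training (sketch)")
    parser.add_argument("--batch-size", type=int, default=128)
    parser.add_argument("--data_path", type=str, default="")
    parser.add_argument("--lr", type=float, default=0.1)
    parser.add_argument("--epochs", type=int, default=90)
    parser.add_argument("--weight-decay", type=float, default=1e-4)
    # Still called --gpu, but the script would use it as the NPU device index.
    parser.add_argument("--gpu", type=int, default=0)
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args(
        ["--batch-size", "256", "--weight-decay", "5e-5", "--gpu", "0"]
    )
    # Hyphens in option names become underscores in attribute names.
    print(args.batch_size, args.weight_decay, args.gpu)
```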

02 Multi-Card Distributed Training

https://www.hiascend.com/document/detail/zh/Pytorch/60RC2/ptmoddevg/trainingmigrguide/PT_LMTMOG_0080.html

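Multi-card training comes in two forms: single-node multi-card training and multi-node multi-card training. Both require converting the single-card training script into a multi-card training script. The workflow is as follows.

(1) Single-node multi-card training

- First, follow the section "Converting a single-card script to a multi-card script".

- Then follow the section "Launching multi-card distributed training": choose a suitable launch method, make the necessary changes, and run the corresponding launch command.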

Converting a single-card script to a multi-card script

1. Add the following code to the main function.

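import os
import torch
import torch_npu  # registers the 'npu' device type with PyTorch
local_rank = int(os.environ["LOCAL_RANK"])  # set per process by the launcher
device = torch.device('npu', local_rank)
torch.distributed.init_process_group(backend="hccl", rank=local_rank)  # HCCL: Ascend collective backend; rank=local_rank only holds on a single node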

2. After loading the training dataset, create train_sampler.

train_sampler = torch.utils.data.distributed.DistributedSampler(train_data)

3. After defining the model, wrap it in DDP (DistributedDataParallel).

model = model.to(device)  # move the model to this process's NPU before wrapping it
model = torch.nn.parallel.DistributedDataParallel(model, device_ids=[local_rank], output_device=local_rank)

4. Pass train_sampler to the data loader train_dataloader.

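from torch.utils.data import DataLoader
train_dataloader = DataLoader(dataset=train_data, batch_size=batch_size, sampler=train_sampler)  # do not also pass shuffle=True: sampler and shuffle are mutually exclusive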
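Steps 2 and 4 work together: DistributedSampler gives each rank its own slice of the dataset indices, so the ranks do not all train on the same samples in an epoch. The pure-Python sketch below mimics the sampler's default index layout with shuffling turned off (pad the index list to a multiple of world_size by repeating indices from the start, then let rank r take positions r, r + world_size, ...). `shard_indices` is a hypothetical helper for illustration, not the torch implementation. With shuffling on, the real sampler permutes the indices using a seed plus the epoch number, which is why `train_sampler.set_epoch(epoch)` should be called at the start of every epoch.

```python
import math
from typing import List


def shard_indices(dataset_len: int, num_replicas: int, rank: int) -> List[int]:
    """Indices rank `rank` would see (no shuffling, no dropped samples)."""
    indices = list(range(dataset_len))
    num_samples = math.ceil(dataset_len / num_replicas)
    total_size = num_samples * num_replicas
    padding = total_size - len(indices)
    if padding > 0:
        # Repeat indices from the start until the length is divisible by num_replicas.
        indices += (indices * math.ceil(padding / len(indices)))[:padding]
    # Interleaved slice: rank r takes positions r, r + num_replicas, ...
    return indices[rank:total_size:num_replicas]


if __name__ == "__main__":
    for r in range(4):
        print(r, shard_indices(10, num_replicas=4, rank=r))
```

With 10 samples and 4 ranks every rank gets 3 indices, so two samples (0 and 1) are seen twice per epoch; this padding keeps the number of steps identical on all ranks.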

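Launching multi-card distributed training

In both single-node and multi-node scenarios, distributed training can be launched in five ways:

- Shell script (recommended)

- mp.spawn

- Python (torch.distributed.launch)

- torchrun: supported only in PyTorch 1.11.0 and later.

- torch_npu_run (recommended for clusters): an improved version of torchrun for large clusters that speeds up link setup across the cluster; supported only in PyTorch 1.11.0.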

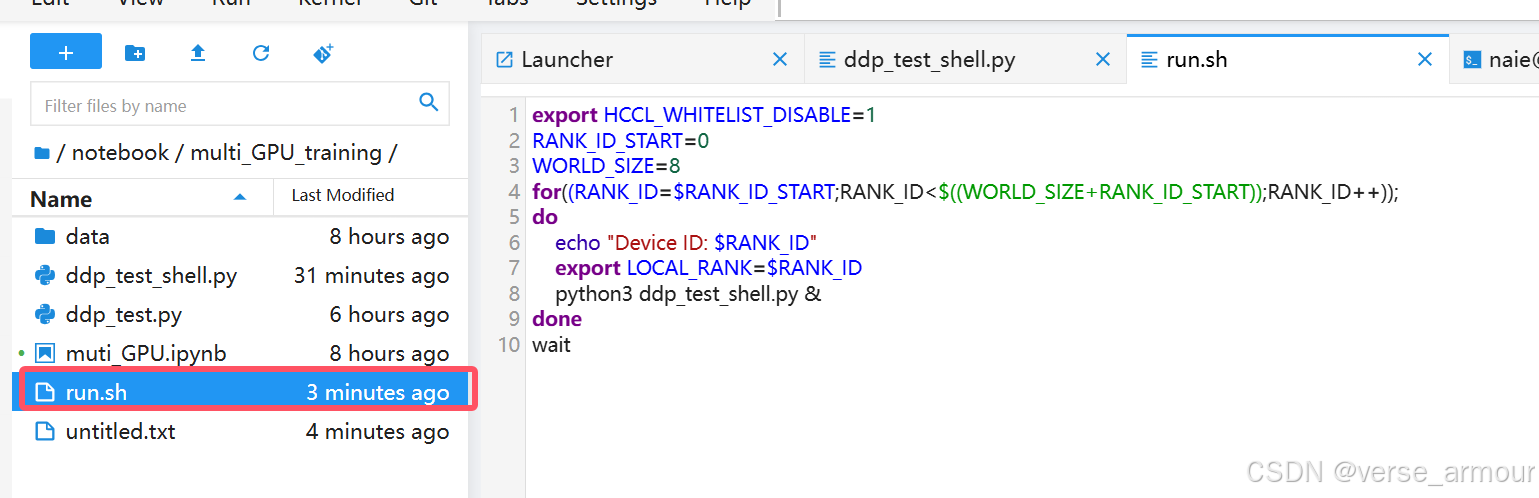

1. Shell script method

A shell script is a file containing a sequence of commands that the shell executes. Shell scripts automate tasks, so repetitive work can be done quickly and consistently.

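#!/bin/bash
# Disable HCCL's communication allowlist check
export HCCL_WHITELIST_DISABLE=1
RANK_ID_START=0   # first device ID
WORLD_SIZE=8      # number of processes, one per NPU (a shell variable, not exported)
for((RANK_ID=$RANK_ID_START;RANK_ID<$((WORLD_SIZE+RANK_ID_START));RANK_ID++));
do
    echo "Device ID: $RANK_ID"
    export LOCAL_RANK=$RANK_ID   # the training script reads os.environ["LOCAL_RANK"]
    python3 ddp_test_shell.py &  # start one training process in the background
done
wait   # block until every background process has exited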

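Note: for 8-card training, set WORLD_SIZE=8; for single-card training, set WORLD_SIZE=1.

world_size (total number of processes): in distributed training or parallel computing, world_size is the total number of processes taking part in the job. Each process typically runs on its own CPU core, GPU/NPU, or an entire compute node.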

- Save the script above to a .sh file, for example run.sh.

- Make it executable:

chmod +x run.sh

- Run the script:

./run.sh
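The run.sh loop starts one background process per device, each with its own LOCAL_RANK, and `wait` blocks until all of them exit. A rough Python equivalent using `subprocess` is sketched below. Unlike the shell version, it also exports WORLD_SIZE, MASTER_ADDR and MASTER_PORT, which the default env:// rendezvous behind `init_process_group` reads; with run.sh these would have to be set somewhere else, for example inside the training script. `rank_env`, `launch`, and the default address and port are assumptions for illustration.

```python
import os
import subprocess
import sys
from typing import Dict, List


def rank_env(local_rank: int, world_size: int,
             master_addr: str = "localhost", master_port: int = 29500) -> Dict[str, str]:
    """Environment for one worker on a single node (so RANK == LOCAL_RANK)."""
    env = dict(os.environ)
    env.update({
        "HCCL_WHITELIST_DISABLE": "1",  # same as the export in run.sh
        "LOCAL_RANK": str(local_rank),
        "RANK": str(local_rank),
        "WORLD_SIZE": str(world_size),
        "MASTER_ADDR": master_addr,
        "MASTER_PORT": str(master_port),
    })
    return env


def launch(cmd: List[str], world_size: int) -> List[int]:
    """Start world_size copies of cmd in parallel and wait for all of them."""
    procs = []
    for local_rank in range(world_size):
        print(f"Device ID: {local_rank}")
        procs.append(subprocess.Popen(cmd, env=rank_env(local_rank, world_size)))
    # Equivalent of the shell `wait`: collect every exit code.
    return [p.wait() for p in procs]


if __name__ == "__main__":
    # Real use would be: launch([sys.executable, "ddp_test_shell.py"], world_size=8)
    codes = launch([sys.executable, "-c", "import os; print(os.environ['LOCAL_RANK'])"], 2)
    print("exit codes:", codes)
```

A non-zero exit code in the returned list identifies the rank that failed, which the shell `wait` alone does not report.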

2. torchrun method

export HCCL_WHITELIST_DISABLE=1

torchrun --standalone --nnodes=1 --nproc_per_node=8 ddp_test_shell.py
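torchrun does not pass a `--local-rank` argument to the script; instead it exports LOCAL_RANK, RANK, WORLD_SIZE, MASTER_ADDR and MASTER_PORT to every worker. A script that reads its distributed settings from the environment therefore behaves the same under torchrun and under a shell loop that exports those variables. `DistEnv` and `read_dist_env` are hypothetical names, and falling back to a single-process setup when a variable is missing is a design choice so the script can also run without a launcher.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class DistEnv:
    local_rank: int
    rank: int
    world_size: int
    master_addr: str
    master_port: int


def read_dist_env(environ: Optional[Mapping[str, str]] = None) -> DistEnv:
    """Read the variables a launcher such as torchrun exports to each worker.

    Missing variables fall back to a single-process setup.
    """
    env = os.environ if environ is None else environ
    local_rank = int(env.get("LOCAL_RANK", "0"))
    return DistEnv(
        local_rank=local_rank,
        rank=int(env.get("RANK", str(local_rank))),
        world_size=int(env.get("WORLD_SIZE", "1")),
        master_addr=env.get("MASTER_ADDR", "localhost"),
        master_port=int(env.get("MASTER_PORT", "29500")),
    )


if __name__ == "__main__":
    print(read_dist_env())
```

In the training script, `local_rank` would then select the device (`torch.device('npu', local_rank)`), while `rank` and `world_size` go to `init_process_group`.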

3. Python method (torch.distributed.launch)

# Set master_addr and master_port according to your environment

export HCCL_WHITELIST_DISABLE=1

python3 -m torch.distributed.launch --nproc_per_node 8 --master_addr localhost --master_port *** ddp_test.py

[naie@notebook-npu-bde28a8c-568c5bcb58-mlj9c multi_GPU_training]$ python3 -m torch.distributed.launch --nproc_per_node 1 --master_addr localhost --master_port "29500" ddp_test.py

/home/naie/.local/lib/python3.9/site-packages/torch/distributed/launch.py:181: FutureWarning: The module torch.distributed.launch is deprecated

and will be removed in future. Use torchrun.

Note that --use-env is set by default in torchrun.

If your script expects `--local-rank` argument to be set, please

change it to read from `os.environ['LOCAL_RANK']` instead. See

https://pytorch.org/docs/stable/distributed.html#launch-utility for

further instructions

warnings.warn(

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/latest owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/8.0.RC2.2/aarch64-linux/ascend_toolkit_install.info owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

usage: ddp_test.py [-h] [--batch_size BATCH_SIZE] [--gpu GPU]

ddp_test.py: error: unrecognized arguments: --local-rank=0

[2024-10-28 23:26:37,024] torch.distributed.elastic.multiprocessing.api: [ERROR] failed (exitcode: 2) local_rank: 0 (pid: 24010) of binary: /usr1/python/python/target/checkout/package/python/python-24.10.217/bin/python3

Traceback (most recent call last):

File "/usr1/build/lib/python3.9/runpy.py", line 197, in _run_module_as_main

File "/usr1/build/lib/python3.9/runpy.py", line 87, in _run_code

File "/home/naie/.local/lib/python3.9/site-packages/torch/distributed/launch.py", line 196, in <module>

main()

File "/home/naie/.local/lib/python3.9/site-packages/torch/distributed/launch.py", line 192, in main

launch(args)

File "/home/naie/.local/lib/python3.9/site-packages/torch/distributed/launch.py", line 177, in launch

run(args)

File "/home/naie/.local/lib/python3.9/site-packages/torch/distributed/run.py", line 797, in run

elastic_launch(

File "/home/naie/.local/lib/python3.9/site-packages/torch/distributed/launcher/api.py", line 134, in __call__

return launch_agent(self._config, self._entrypoint, list(args))

File "/home/naie/.local/lib/python3.9/site-packages/torch/distributed/launcher/api.py", line 264, in launch_agent

raise ChildFailedError(

torch.distributed.elastic.multiprocessing.errors.ChildFailedError:

============================================================

ddp_test.py FAILED

------------------------------------------------------------

Failures:

<NO_OTHER_FAILURES>

------------------------------------------------------------

Root Cause (first observed failure):

[0]:

time : 2024-10-28_23:26:37

host : notebook-npu-bde28a8c-568c5bcb58-mlj9c

rank : 0 (local_rank: 0)

exitcode : 2 (pid: 24010)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

============================================================

[naie@notebook-npu-bde28a8c-568c5bcb58-mlj9c multi_GPU_training]$ ./run_python.sh

sh: ./run_python.sh: Permission denied

[naie@notebook-npu-bde28a8c-568c5bcb58-mlj9c multi_GPU_training]$ chmod +x run_python.sh

[naie@notebook-npu-bde28a8c-568c5bcb58-mlj9c multi_GPU_training]$ ./run_python.sh

/home/naie/.local/lib/python3.9/site-packages/torch/distributed/launch.py:181: FutureWarning: The module torch.distributed.launch is deprecated

and will be removed in future. Use torchrun.

Note that --use-env is set by default in torchrun.

If your script expects `--local-rank` argument to be set, please

change it to read from `os.environ['LOCAL_RANK']` instead. See

https://pytorch.org/docs/stable/distributed.html#launch-utility for

further instructions

warnings.warn(

[2024-10-28 23:39:53,660] torch.distributed.run: [WARNING]

[2024-10-28 23:39:53,660] torch.distributed.run: [WARNING] *****************************************

[2024-10-28 23:39:53,660] torch.distributed.run: [WARNING] Setting OMP_NUM_THREADS environment variable for each process to be 1 in default, to avoid your system being overloaded, please further tune the variable for optimal performance in your application as needed.

[2024-10-28 23:39:53,660] torch.distributed.run: [WARNING] *****************************************

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/latest owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/8.0.RC2.2/aarch64-linux/ascend_toolkit_install.info owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/latest owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/8.0.RC2.2/aarch64-linux/ascend_toolkit_install.info owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/latest owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/8.0.RC2.2/aarch64-linux/ascend_toolkit_install.info owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/latest owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/latest owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/8.0.RC2.2/aarch64-linux/ascend_toolkit_install.info owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/8.0.RC2.2/aarch64-linux/ascend_toolkit_install.info owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

usage: ddp_test.py [-h] [--batch_size BATCH_SIZE] [--gpu GPU]

usage: ddp_test.py [-h] [--batch_size BATCH_SIZE] [--gpu GPU]

ddp_test.py: error: unrecognized arguments: --local-rank=0

ddp_test.py: error: unrecognized arguments: --local-rank=1

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/latest owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/8.0.RC2.2/aarch64-linux/ascend_toolkit_install.info owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/latest owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/8.0.RC2.2/aarch64-linux/ascend_toolkit_install.info owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/latest owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/8.0.RC2.2/aarch64-linux/ascend_toolkit_install.info owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

usage: ddp_test.py [-h] [--batch_size BATCH_SIZE] [--gpu GPU]

ddp_test.py: error: unrecognized arguments: --local-rank=3

usage: ddp_test.py [-h] [--batch_size BATCH_SIZE] [--gpu GPU]

ddp_test.py: error: unrecognized arguments: --local-rank=4

usage: ddp_test.py [-h] [--batch_size BATCH_SIZE] [--gpu GPU]

ddp_test.py: error: unrecognized arguments: --local-rank=5

usage: ddp_test.py [-h] [--batch_size BATCH_SIZE] [--gpu GPU]

ddp_test.py: error: unrecognized arguments: --local-rank=6

usage: ddp_test.py [-h] [--batch_size BATCH_SIZE] [--gpu GPU]

ddp_test.py: error: unrecognized arguments: --local-rank=2

usage: ddp_test.py [-h] [--batch_size BATCH_SIZE] [--gpu GPU]

ddp_test.py: error: unrecognized arguments: --local-rank=7

[2024-10-28 23:40:03,752] torch.distributed.elastic.multiprocessing.api: [ERROR] failed (exitcode: 2) local_rank: 0 (pid: 24696) of binary: /usr1/python/python/target/checkout/package/python/python-24.10.217/bin/python3

Traceback (most recent call last):

File "/usr1/build/lib/python3.9/runpy.py", line 197, in _run_module_as_main

File "/usr1/build/lib/python3.9/runpy.py", line 87, in _run_code

File "/home/naie/.local/lib/python3.9/site-packages/torch/distributed/launch.py", line 196, in <module>

main()

File "/home/naie/.local/lib/python3.9/site-packages/torch/distributed/launch.py", line 192, in main

launch(args)

File "/home/naie/.local/lib/python3.9/site-packages/torch/distributed/launch.py", line 177, in launch

run(args)

File "/home/naie/.local/lib/python3.9/site-packages/torch/distributed/run.py", line 797, in run

elastic_launch(

File "/home/naie/.local/lib/python3.9/site-packages/torch/distributed/launcher/api.py", line 134, in __call__

return launch_agent(self._config, self._entrypoint, list(args))

File "/home/naie/.local/lib/python3.9/site-packages/torch/distributed/launcher/api.py", line 264, in launch_agent

raise ChildFailedError(

torch.distributed.elastic.multiprocessing.errors.ChildFailedError:

============================================================

ddp_test.py FAILED

------------------------------------------------------------

Failures:

[1]:

time : 2024-10-28_23:40:03

host : notebook-npu-bde28a8c-568c5bcb58-mlj9c

rank : 1 (local_rank: 1)

exitcode : 2 (pid: 24697)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

[2]:

time : 2024-10-28_23:40:03

host : notebook-npu-bde28a8c-568c5bcb58-mlj9c

rank : 2 (local_rank: 2)

exitcode : 2 (pid: 24698)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

[3]:

time : 2024-10-28_23:40:03

host : notebook-npu-bde28a8c-568c5bcb58-mlj9c

rank : 3 (local_rank: 3)

exitcode : 2 (pid: 24699)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

[4]:

time : 2024-10-28_23:40:03

host : notebook-npu-bde28a8c-568c5bcb58-mlj9c

rank : 4 (local_rank: 4)

exitcode : 2 (pid: 24700)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

[5]:

time : 2024-10-28_23:40:03

host : notebook-npu-bde28a8c-568c5bcb58-mlj9c

rank : 5 (local_rank: 5)

exitcode : 2 (pid: 24701)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

[6]:

time : 2024-10-28_23:40:03

host : notebook-npu-bde28a8c-568c5bcb58-mlj9c

rank : 6 (local_rank: 6)

exitcode : 2 (pid: 24702)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

[7]:

time : 2024-10-28_23:40:03

host : notebook-npu-bde28a8c-568c5bcb58-mlj9c

rank : 7 (local_rank: 7)

exitcode : 2 (pid: 24703)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

------------------------------------------------------------

Root Cause (first observed failure):

[0]:

time : 2024-10-28_23:40:03

host : notebook-npu-bde28a8c-568c5bcb58-mlj9c

rank : 0 (local_rank: 0)

exitcode : 2 (pid: 24696)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

============================================================

[naie@notebook-npu-bde28a8c-568c5bcb58-mlj9c multi_GPU_training]$ ./run_python.sh

/home/naie/.local/lib/python3.9/site-packages/torch/distributed/launch.py:181: FutureWarning: The module torch.distributed.launch is deprecated

and will be removed in future. Use torchrun.

Note that --use-env is set by default in torchrun.

If your script expects `--local-rank` argument to be set, please

change it to read from `os.environ['LOCAL_RANK']` instead. See

https://pytorch.org/docs/stable/distributed.html#launch-utility for

further instructions

warnings.warn(

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/latest owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

/home/naie/.local/lib/python3.9/site-packages/torch_npu/utils/path_manager.py:79: UserWarning: Warning: The /usr/local/Ascend/ascend-toolkit/8.0.RC2.2/aarch64-linux/ascend_toolkit_install.info owner does not match the current user.

warnings.warn(f"Warning: The {path} owner does not match the current user.")

usage: ddp_test.py [-h] [--batch_size BATCH_SIZE] [--gpu GPU]

ddp_test.py: error: unrecognized arguments: --local-rank=0

[2024-10-28 23:41:28,244] torch.distributed.elastic.multiprocessing.api: [ERROR] failed (exitcode: 2) local_rank: 0 (pid: 24925) of binary: /usr1/python/python/target/checkout/package/python/python-24.10.217/bin/python3

Traceback (most recent call last):

File "/usr1/build/lib/python3.9/runpy.py", line 197, in _run_module_as_main

File "/usr1/build/lib/python3.9/runpy.py", line 87, in _run_code

File "/home/naie/.local/lib/python3.9/site-packages/torch/distributed/launch.py", line 196, in <module>

main()

File "/home/naie/.local/lib/python3.9/site-packages/torch/distributed/launch.py", line 192, in main

launch(args)

File "/home/naie/.local/lib/python3.9/site-packages/torch/distributed/launch.py", line 177, in launch

run(args)

File "/home/naie/.local/lib/python3.9/site-packages/torch/distributed/run.py", line 797, in run

elastic_launch(

File "/home/naie/.local/lib/python3.9/site-packages/torch/distributed/launcher/api.py", line 134, in __call__

return launch_agent(self._config, self._entrypoint, list(args))

File "/home/naie/.local/lib/python3.9/site-packages/torch/distributed/launcher/api.py", line 264, in launch_agent

raise ChildFailedError(

torch.distributed.elastic.multiprocessing.errors.ChildFailedError:

============================================================

ddp_test.py FAILED

------------------------------------------------------------

Failures:

<NO_OTHER_FAILURES>

------------------------------------------------------------

Root Cause (first observed failure):

[0]:

time : 2024-10-28_23:41:28

host : notebook-npu-bde28a8c-568c5bcb58-mlj9c

rank : 0 (local_rank: 0)

exitcode : 2 (pid: 24925)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

============================================================

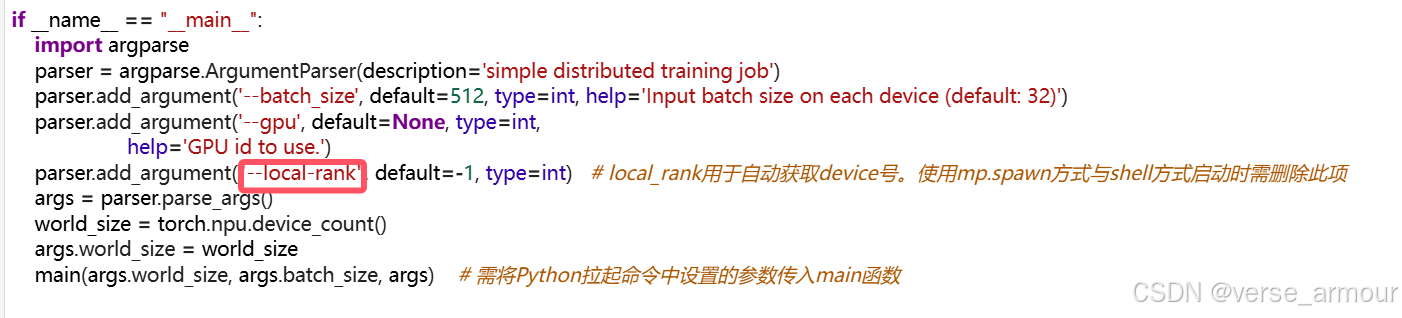

The Python launch method above kept failing. Eventually I focused on this part of the error message:

usage: ddp_test.py [-h] [--batch_size BATCH_SIZE] [--gpu GPU] [--local_rank LOCAL_RANK]

ddp_test.py: error: unrecognized arguments: --local-rank=0

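Looking closely, the unrecognized argument is `local-rank`, not `local_rank`, so this is where the problem lies. I went through the .sh script carefully but could not see why `local-rank` was being passed in. In the end I had no choice but to modify ddp_test.py itself, and only then did it run successfully.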
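The hyphen does not come from the .sh script. Since PyTorch 2.0, the deprecated torch.distributed.launch passes the local rank to each worker as `--local-rank=N` (earlier versions used `--local_rank`), which is what the FutureWarning in the log hints at. A script that only declares `--local_rank` rejects the argument, and argparse exits with code 2, matching the `exitcode: 2` failures above. One way to accept both spellings is to register them as aliases of one argparse option. The sketch below assumes the `--batch_size` and `--gpu` flags from the usage line, with made-up defaults; switching to torchrun, or reading `os.environ['LOCAL_RANK']` as the warning suggests, avoids the argument altogether.

```python
import argparse
import os
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    parser = argparse.ArgumentParser(description="ddp_test.py (sketch)")
    parser.add_argument("--batch_size", type=int, default=32)  # default is an assumption
    parser.add_argument("--gpu", type=int, default=0)
    # Accept both spellings; either one is stored in args.local_rank.
    # torch.distributed.launch in PyTorch >= 2.0 passes --local-rank=N,
    # older versions passed --local_rank=N, and torchrun passes neither,
    # so fall back to the LOCAL_RANK environment variable.
    parser.add_argument("--local-rank", "--local_rank", dest="local_rank", type=int,
                        default=int(os.environ.get("LOCAL_RANK", "0")))
    return parser.parse_args(argv)


if __name__ == "__main__":
    print(parse_args(["--local-rank=3"]).local_rank)
```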

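(2) Multi-node multi-card training

- First, follow the section "Converting a single-card script to a multi-card script".

- Next, follow "Preparing the multi-node multi-card training environment" for the necessary configuration.

- Finally, follow "Launching multi-card distributed training": choose a suitable launch method, make the necessary changes, and run the corresponding launch command.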
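Across several nodes, each process has a global rank in [0, world_size) in addition to its LOCAL_RANK on its own node, so `rank=local_rank` from step 1 no longer holds. Under the usual convention for launchers such as torchrun, where every node starts the same number of processes, world_size = nnodes × nproc_per_node and global rank = node_rank × nproc_per_node + local_rank. The helpers below are a hypothetical sketch of that arithmetic and assume every node runs the same number of processes.

```python
def world_size(nnodes: int, nproc_per_node: int) -> int:
    """Total number of processes across all nodes."""
    return nnodes * nproc_per_node


def global_rank(node_rank: int, nproc_per_node: int, local_rank: int) -> int:
    """Global rank of a process, assuming every node runs nproc_per_node processes."""
    if not 0 <= local_rank < nproc_per_node:
        raise ValueError("local_rank must be in [0, nproc_per_node)")
    return node_rank * nproc_per_node + local_rank


if __name__ == "__main__":
    # Two nodes with 8 NPUs each: local_rank 3 on the second node (node_rank 1).
    print(world_size(2, 8), global_rank(1, 8, 3))
```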
