【AI】Huawei Ascend NPU: Building a MindIE Image

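This article walks through a complete installation and deployment of MindIE 1.0.0: 1) install the required packages (ATB Models, torch_npu, MindIE); 2) configure the environment variables; 3) adjust permissions and the configuration file, including key parameters such as the model path and the number of cards; 4) start the service and test the API. It also links to the parameter reference and includes a sample config.json.

Instead of using the official image, this article builds a MindIE image by hand on a 910B machine, so that it can be installed and deployed offline.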

Environment: Ascend 910B with CANN 8.0

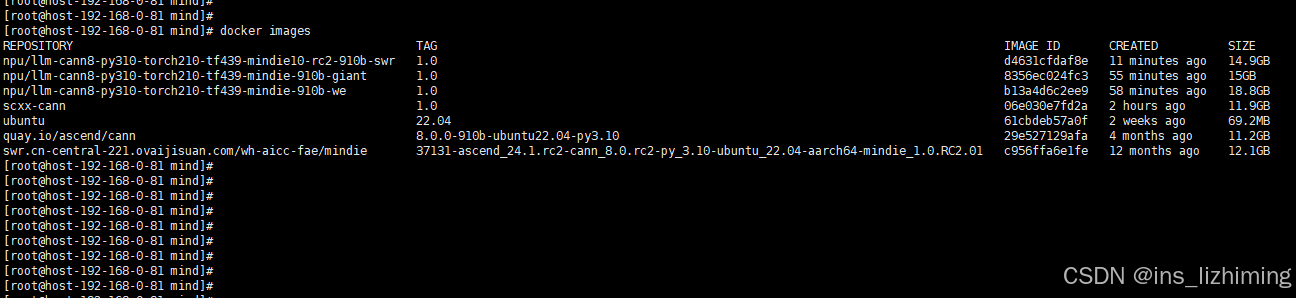

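About the Docker image:

1. Start from Ubuntu 22.04 and install CANN yourself.

To install CANN 8.0 yourself, download the following files from the official site and install them by following the official guide, "Install CANN Packages (Physical Machine Scenario)", in the CANN Commercial Edition 8.0.0 developer documentation on the Ascend Community site: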

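Ascend-cann-toolkit_8.0.0_linux-aarch64.run

Ascend-cann-kernels-910b_8.0.0_linux-aarch64.run

Ascend-cann-nnal_8.0.0_linux-aarch64.run

(Install the toolkit first, then the kernels package, then NNAL.)

2. Alternatively, use an image that already has CANN installed, such as quay.io/ascend/cann:8.0.0-910b-ubuntu22.04-py3.10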

Image registry: Quay

A Dockerfile for reference:

```dockerfile
FROM quay.io/ascend/cann:8.0.0-910b-ubuntu22.04-py3.10

ARG PIP_INDEX_URL="https://mirrors.tuna.tsinghua.edu.cn/pypi/web/simple"
ARG COMPILE_CUSTOM_KERNELS=1

ENV DEBIAN_FRONTEND=noninteractive
ENV COMPILE_CUSTOM_KERNELS=${COMPILE_CUSTOM_KERNELS}

# Use bash for RUN explicitly: `source` is a bash builtin and is not
# available in dash, which is /bin/sh on Ubuntu.
SHELL ["/bin/bash", "-c"]

RUN apt-get update -y && \
    apt-get install -y python3-pip git vim wget net-tools gcc g++ cmake libnuma-dev && \
    rm -rf /var/cache/apt/* && \
    rm -rf /var/lib/apt/lists/*

WORKDIR /workspace

RUN pip config set global.index-url ${PIP_INDEX_URL}

RUN python3 -m pip uninstall -y triton && \
    python3 -m pip cache purge

# Variables exported and scripts sourced here only last for this RUN step;
# any pip install that needs the Ascend environment belongs in this same step.
RUN export PIP_EXTRA_INDEX_URL=https://mirrors.huaweicloud.com/ascend/repos/pypi && \
    source /usr/local/Ascend/ascend-toolkit/set_env.sh && \
    source /usr/local/Ascend/nnal/atb/set_env.sh && \
    export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/Ascend/ascend-toolkit/latest/`uname -i`-linux/devlib && \
    python3 -m pip cache purge

RUN python3 -m pip install modelscope ray && \
    python3 -m pip cache purge

CMD ["/bin/bash"]
```
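A container built from this Dockerfile (or the prebuilt MindIE image below) only sees the NPUs if the device nodes and host driver files are passed through. The helper below is a sketch, not part of the original guide: `npu_docker_args` is a hypothetical function, the tag `mindie-base:8.0.0` is made up, and the device and mount list follows what Ascend container examples commonly use, assuming the host driver lives under /usr/local/Ascend/driver. Check it against your host before relying on it.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not from the original guide): print `docker run`
# options that expose the given NPU cards, one option per line.
# Assumes the host driver is installed under /usr/local/Ascend/driver.
npu_docker_args() {
  local card
  # Validate every card id first so nothing is printed on bad input.
  for card in "$@"; do
    if [[ ! "$card" =~ ^[0-9]+$ ]]; then
      echo "invalid NPU card id: $card" >&2
      return 1
    fi
  done
  # One /dev/davinciN node per requested card.
  for card in "$@"; do
    printf '%s\n' "--device=/dev/davinci${card}"
  done
  # Management devices shared by all cards.
  printf '%s\n' \
    --device=/dev/davinci_manager \
    --device=/dev/devmm_svm \
    --device=/dev/hisi_hdc
  # Host driver libraries and tools, mounted read-only.
  printf '%s\n' \
    --volume=/usr/local/dcmi:/usr/local/dcmi:ro \
    --volume=/usr/local/bin/npu-smi:/usr/local/bin/npu-smi:ro \
    --volume=/usr/local/Ascend/driver/lib64:/usr/local/Ascend/driver/lib64:ro \
    --volume=/usr/local/Ascend/driver/version.info:/usr/local/Ascend/driver/version.info:ro \
    --volume=/etc/ascend_install.info:/etc/ascend_install.info:ro
}

# Example usage (image tag is hypothetical):
#   docker build -t mindie-base:8.0.0 .
#   mapfile -t npu_args < <(npu_docker_args 0 1 2 3)
#   docker run -it --name mindie "${npu_args[@]}" mindie-base:8.0.0
```

Emitting one option per line lets `mapfile` turn the output into a bash array, so paths are passed to `docker run` without any word-splitting surprises.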

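3. If you would rather not build an image at all, you can use a ready-made MindIE image.

Image source: 镜像仓库网 (an image registry mirror site)

```bash
docker pull swr.cn-central-221.ovaijisuan.com/wh-aicc-fae/mindie:37131-ascend_24.1.rc2-cann_8.0.rc2-py_3.10-ubuntu_22.04-aarch64-mindie_1.0.RC2.01
```

Judging by its tag, this image ships MindIE 1.0.RC2 with CANN 8.0.RC2, not the MindIE 1.0.0 / CANN 8.0.0 combination used in the rest of this article.

1. Deployment reference: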

Install Dependencies - Install the Development Environment - MindIE Installation Guide - MindIE 1.0.0 Developer Documentation - Ascend Community

2. Parameter reference: https://www.hiascend.com/document/detail/zh/mindie/100/mindieservice/servicedev/mindie_service0285.html#ZH-CN_TOPIC_0000002186689269__section27371398218

3. Required packages:

ATB Models package: Ascend-mindie-atb-models_1.0.0_linux-aarch64_py310_torch2.1.0-abi0.tar.gz

torch_npu package: torch_npu-2.1.0.post10-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl

MindIE package: Ascend-mindie_1.0.0_linux-aarch64.run

requirements.txt, with the contents below; install it with pip install -r requirements.txt:

```text
gevent==22.10.2
python-rapidjson>=1.6
geventhttpclient==2.0.11
urllib3>=2.1.0
greenlet==3.0.3
zope.event==5.0
zope.interface==6.1
prettytable~=3.5.0
jsonschema~=4.21.1
jsonlines~=4.0.0
thefuzz~=0.22.1
pyarrow~=15.0.0
pydantic~=2.6.3
sacrebleu~=2.4.2
rouge_score~=0.1.2
pillow~=10.3.0
requests~=2.31.0
matplotlib>=1.3.0
text_generation~=0.7.0
numpy~=1.26.3
pandas~=2.1.4
transformers>=4.44.0
tritonclient[all]
colossalai==0.4.0
make
net-tools
protobuf==3.20
decorator>=4.4.0
sympy>=1.5.1
cffi>=1.12.3
attrs
cython
pyyaml
pathlib2
psutil
scipy
absl-py
```

4. Install torch_npu:

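```bash
pip install torch_npu-2.1.0.post10-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl
```

5. Install ATB Models. The package can be extracted into any directory, but files extracted elsewhere may not be found after a restart, so extracting it under the Ascend directory is recommended.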

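Create an llm_model directory under /usr/local/Ascend, extract the package into it, and install the atb_llm wheel it contains:

```bash
mkdir -p /usr/local/Ascend/llm_model
tar -xzf Ascend-mindie-atb-models_1.0.0_linux-aarch64_py310_torch2.1.0-abi0.tar.gz -C /usr/local/Ascend/llm_model
cd /usr/local/Ascend/llm_model
pip install atb_llm-0.0.1-py3-none-any.whl
```

Set the environment variables:

```bash
source /usr/local/Ascend/llm_model/set_env.sh
```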

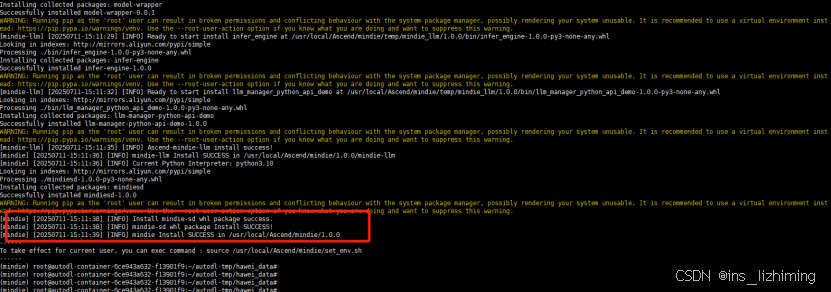
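The ATB Models file name encodes the torch version and C++ ABI it was built for (`torch2.1.0-abi0`), and the torch_npu wheel name encodes the torch version it targets (`2.1.0.post10`). Comparing them is a cheap way to catch a mismatched download. The functions below are hypothetical helpers that only parse file names; `installed_torch_abi` additionally assumes torch is already installed and uses `torch.compiled_with_cxx11_abi()`.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not from the original guide) that compare the torch
# version and ABI encoded in the package file names.

# atb_pkg_torch NAME: torch version in an ATB Models package name,
# e.g. ..._torch2.1.0-abi0.tar.gz -> 2.1.0
atb_pkg_torch() {
  local re='_torch([0-9]+\.[0-9]+\.[0-9]+)-abi[01]'
  [[ "$1" =~ $re ]] || return 1
  printf '%s\n' "${BASH_REMATCH[1]}"
}

# atb_pkg_abi NAME: ABI flag (0 or 1) in an ATB Models package name.
atb_pkg_abi() {
  local re='_torch[0-9.]+-abi([01])'
  [[ "$1" =~ $re ]] || return 1
  printf '%s\n' "${BASH_REMATCH[1]}"
}

# torch_npu_torch WHEEL: torch version a torch_npu wheel targets,
# e.g. torch_npu-2.1.0.post10-cp310-... -> 2.1.0
torch_npu_torch() {
  local re='^torch_npu-([0-9]+\.[0-9]+\.[0-9]+)'
  [[ "$(basename "$1")" =~ $re ]] || return 1
  printf '%s\n' "${BASH_REMATCH[1]}"
}

# check_atb_torch_match ATB_PKG TORCH_NPU_WHL: succeed only if both packages
# target the same torch version.
check_atb_torch_match() {
  local atb npu
  atb=$(atb_pkg_torch "$1") || { echo "cannot parse: $1" >&2; return 1; }
  npu=$(torch_npu_torch "$2") || { echo "cannot parse: $2" >&2; return 1; }
  if [[ "$atb" != "$npu" ]]; then
    echo "mismatch: ATB Models built for torch $atb, torch_npu targets torch $npu" >&2
    return 1
  fi
  echo "ok: both target torch $atb"
}

# installed_torch_abi: print 1 if the installed torch uses the C++11 ABI,
# else 0. It should equal the abiN suffix of the ATB Models package.
installed_torch_abi() {
  python3 -c 'import torch; print(int(torch.compiled_with_cxx11_abi()))'
}
```

For the packages listed in this article, `check_atb_torch_match` reports that both target torch 2.1.0. If `installed_torch_abi` prints 1 instead of 0, the abi0 package is the wrong variant; the naming suggests an abi1 build is its counterpart.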

6. Install MindIE

```bash
chmod +x Ascend-mindie_1.0.0_linux-aarch64.run
source /usr/local/Ascend/ascend-toolkit/set_env.sh
# Check the package's integrity
./Ascend-mindie_1.0.0_linux-aarch64.run --check
# Install
./Ascend-mindie_1.0.0_linux-aarch64.run --install --quiet
```

Set the environment variables:

```bash
source /usr/local/Ascend/mindie/set_env.sh
```
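The install commands in this step can be wrapped in one function so that the integrity check always runs first and the installer is never started without the toolkit environment. This is only a sketch: `install_mindie`, `_run` and the `DRY_RUN` switch are hypothetical, and the `ASCEND_TOOLKIT_HOME` test assumes that variable is exported by the toolkit's set_env.sh.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the MindIE .run installer (not from the guide).
# DRY_RUN=1 prints each command instead of running it.

_run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# install_mindie RUN_FILE
install_mindie() {
  local pkg="$1"
  if [[ ! -f "$pkg" ]]; then
    echo "package not found: $pkg" >&2
    return 1
  fi
  # A bare file name would be looked up on PATH, so make it explicitly relative.
  [[ "$pkg" == */* ]] || pkg="./$pkg"
  # Assumption: ascend-toolkit/set_env.sh exports ASCEND_TOOLKIT_HOME.
  if [[ -z "${ASCEND_TOOLKIT_HOME:-}" ]]; then
    echo "source /usr/local/Ascend/ascend-toolkit/set_env.sh first" >&2
    return 1
  fi
  _run chmod +x "$pkg" || return 1
  # Do not install if the integrity check fails.
  _run "$pkg" --check || return 1
  _run "$pkg" --install --quiet
}
```

Running `DRY_RUN=1 install_mindie Ascend-mindie_1.0.0_linux-aarch64.run` first shows exactly which commands would execute; dropping `DRY_RUN` performs the installation.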

7. Configure MindIE

```bash
cd /usr/local/Ascend/mindie/latest
```

Set the permissions:

```bash
chmod 750 mindie-service
chmod -R 550 mindie-service/bin
chmod -R 500 mindie-service/bin/mindie_llm_backend_connector
chmod 550 mindie-service/lib
chmod 440 mindie-service/lib/*
chmod 550 mindie-service/lib/grpc
chmod 440 mindie-service/lib/grpc/*
chmod -R 550 mindie-service/include
chmod -R 550 mindie-service/scripts
chmod 750 mindie-service/logs
chmod 750 mindie-service/conf
chmod 640 mindie-service/conf/config.json
chmod 700 mindie-service/security
chmod -R 700 mindie-service/security/*
```

Edit the configuration file:

```bash
cd /usr/local/Ascend/mindie/latest/mindie-service/conf
vi config.json
```
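Several config.json values depend on one another: worldSize should equal the number of cards listed in npuDeviceIds, maxInputTokenLen should not exceed maxSeqLen, and maxPrefillTokens should be larger than maxInputTokenLen. A quick check after editing can catch a mismatch before the daemon fails to start. This is a sketch: `check_mindie_config` is a hypothetical helper, it assumes the key layout of the sample config.json in this article with a single model instance, and it uses python3 (present in the image) only to parse the JSON.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check for MindIE's config.json (not from the guide).
# Assumes the key layout of the sample config.json and one model instance.
check_mindie_config() {
  local cfg="${1:-/usr/local/Ascend/mindie/latest/mindie-service/conf/config.json}"
  python3 - "$cfg" <<'PY'
import json
import sys

with open(sys.argv[1]) as f:
    cfg = json.load(f)

backend = cfg["BackendConfig"]
deploy = backend["ModelDeployConfig"]
sched = backend["ScheduleConfig"]
errors = []

# worldSize should match the number of cards given to the (single) instance.
cards = len(backend["npuDeviceIds"][0])
for model in deploy["ModelConfig"]:
    if model["worldSize"] != cards:
        errors.append(f"{model['modelName']}: worldSize={model['worldSize']} "
                      f"but npuDeviceIds[0] lists {cards} card(s)")

if deploy["maxInputTokenLen"] > deploy["maxSeqLen"]:
    errors.append("maxInputTokenLen is larger than maxSeqLen")
if sched["maxPrefillTokens"] <= deploy["maxInputTokenLen"]:
    errors.append("maxPrefillTokens should be larger than maxInputTokenLen")

for err in errors:
    print("config error:", err, file=sys.stderr)
print("config ok" if not errors else f"{len(errors)} problem(s) found")
sys.exit(1 if errors else 0)
PY
}
```

Run `check_mindie_config` with no argument to check the default location, or pass a path to check another copy. The sample config.json shown later (npuDeviceIds [[0,1]], worldSize 2) passes.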

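Parameters to change:

httpsEnabled: false

npuDeviceIds: [[0,1,2,3]], set according to the cards you actually use

worldSize: 4, must equal the number of cards

modelName: the model name (clients pass this value as the "model" field in requests)

modelWeightPath: the path to the model weights

maxSeqLen: maximum context length, e.g. 25600

maxInputTokenLen: maximum number of input tokens, e.g. 20480

maxPrefillTokens: should be larger than maxInputTokenLen, e.g. 81920

Then, in the mindie-service directory, make set_env.sh writable and source the environment scripts:

```bash
cd /usr/local/Ascend/mindie/latest/mindie-service
chmod +w set_env.sh
source /usr/local/Ascend/ascend-toolkit/set_env.sh
source /usr/local/Ascend/nnal/atb/set_env.sh
source /usr/local/Ascend/llm_model/set_env.sh
source /usr/local/Ascend/mindie/latest/mindie-service/set_env.sh
```

Note: the model files need 750 permissions, for example: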

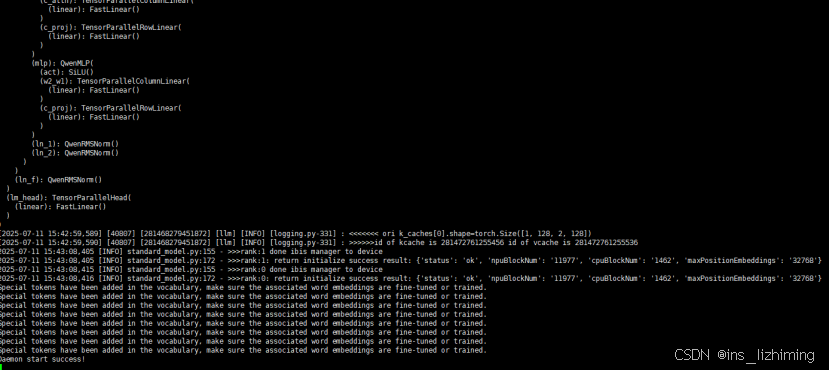

```bash
chmod -R 750 Qwen2.5-7B-Instruct/
```

8. Start the service

Run, from /usr/local/Ascend/mindie/latest/mindie-service:

```bash
./bin/mindieservice_daemon
```
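Loading the weights takes a while, so port 1025 (the `port` in config.json) may not accept connections immediately after the daemon starts. For scripted tests, a small wait loop avoids racing the startup. This is a minimal sketch: `wait_for_port` is a hypothetical helper built on bash's `/dev/tcp` redirection, and it only confirms that the TCP port accepts connections, not that the model is ready to answer.

```bash
#!/usr/bin/env bash
# Hypothetical helper: wait_for_port HOST PORT [TIMEOUT_SECONDS]
# Returns 0 once HOST:PORT accepts a TCP connection, 1 on timeout.
# Relies on bash's /dev/tcp redirection (bash-only, not POSIX sh).
wait_for_port() {
  local host="$1" port="$2" timeout="${3:-300}"
  local deadline=$((SECONDS + timeout))
  while (( SECONDS < deadline )); do
    # Open (and, on subshell exit, close) a connection.
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  echo "timed out waiting for ${host}:${port}" >&2
  return 1
}

# Example (running the daemon in the background is an assumption; the guide
# runs it in the foreground):
#   ./bin/mindieservice_daemon &
#   wait_for_port 127.0.0.1 1025 600 && curl http://127.0.0.1:1025/v1/chat/completions ...
```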

9. Test with curl:

```bash
curl http://127.0.0.1:1025/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "qwen25",
    "messages": [
      {"role": "system", "content": "You are a helpful assistant."},
      {"role": "user", "content": "Please give an introduction to Henan."}
    ]
  }'
```

10. Additional reference material:

config.json (Qwen2.5-7B-Instruct on two cards):

```json
{
    "Version" : "1.1.0",
    "LogConfig" :
    {
        "logLevel" : "Info",
        "logFileSize" : 20,
        "logFileNum" : 20,
        "logPath" : "logs/mindservice.log"
    },
    "ServerConfig" :
    {
        "ipAddress" : "127.0.0.1",
        "managementIpAddress" : "127.0.0.2",
        "port" : 1025,
        "managementPort" : 1026,
        "metricsPort" : 1027,
        "allowAllZeroIpListening" : false,
        "maxLinkNum" : 1000,
        "httpsEnabled" : false,
        "fullTextEnabled" : false,
        "tlsCaPath" : "security/ca/",
        "tlsCaFile" : ["ca.pem"],
        "tlsCert" : "security/certs/server.pem",
        "tlsPk" : "security/keys/server.key.pem",
        "tlsPkPwd" : "security/pass/key_pwd.txt",
        "tlsCrlPath" : "security/certs/",
        "tlsCrlFiles" : ["server_crl.pem"],
        "managementTlsCaFile" : ["management_ca.pem"],
        "managementTlsCert" : "security/certs/management/server.pem",
        "managementTlsPk" : "security/keys/management/server.key.pem",
        "managementTlsPkPwd" : "security/pass/management/key_pwd.txt",
        "managementTlsCrlPath" : "security/management/certs/",
        "managementTlsCrlFiles" : ["server_crl.pem"],
        "kmcKsfMaster" : "tools/pmt/master/ksfa",
        "kmcKsfStandby" : "tools/pmt/standby/ksfb",
        "inferMode" : "standard",
        "interCommTLSEnabled" : true,
        "interCommPort" : 1121,
        "interCommTlsCaPath" : "security/grpc/ca/",
        "interCommTlsCaFiles" : ["ca.pem"],
        "interCommTlsCert" : "security/grpc/certs/server.pem",
        "interCommPk" : "security/grpc/keys/server.key.pem",
        "interCommPkPwd" : "security/grpc/pass/key_pwd.txt",
        "interCommTlsCrlPath" : "security/grpc/certs/",
        "interCommTlsCrlFiles" : ["server_crl.pem"],
        "openAiSupport" : "vllm"
    },
    "BackendConfig" : {
        "backendName" : "mindieservice_llm_engine",
        "modelInstanceNumber" : 1,
        "npuDeviceIds" : [[0,1]],
        "tokenizerProcessNumber" : 8,
        "multiNodesInferEnabled" : false,
        "multiNodesInferPort" : 1120,
        "interNodeTLSEnabled" : true,
        "interNodeTlsCaPath" : "security/grpc/ca/",
        "interNodeTlsCaFiles" : ["ca.pem"],
        "interNodeTlsCert" : "security/grpc/certs/server.pem",
        "interNodeTlsPk" : "security/grpc/keys/server.key.pem",
        "interNodeTlsPkPwd" : "security/grpc/pass/mindie_server_key_pwd.txt",
        "interNodeTlsCrlPath" : "security/grpc/certs/",
        "interNodeTlsCrlFiles" : ["server_crl.pem"],
        "interNodeKmcKsfMaster" : "tools/pmt/master/ksfa",
        "interNodeKmcKsfStandby" : "tools/pmt/standby/ksfb",
        "ModelDeployConfig" :
        {
            "maxSeqLen" : 25600,
            "maxInputTokenLen" : 20480,
            "truncation" : false,
            "ModelConfig" : [
                {
                    "modelInstanceType" : "Standard",
                    "modelName" : "qwen25",
                    "modelWeightPath" : "/root/autodl-tmp/Qwen2.5-7B-Instruct",
                    "worldSize" : 2,
                    "cpuMemSize" : 5,
                    "npuMemSize" : -1,
                    "backendType" : "atb",
                    "trustRemoteCode" : false
                }
            ]
        },
        "ScheduleConfig" :
        {
            "templateType" : "Standard",
            "templateName" : "Standard_LLM",
            "cacheBlockSize" : 128,
            "maxPrefillBatchSize" : 50,
            "maxPrefillTokens" : 81920,
            "prefillTimeMsPerReq" : 150,
            "prefillPolicyType" : 0,
            "decodeTimeMsPerReq" : 50,
            "decodePolicyType" : 0,
            "maxBatchSize" : 200,
            "maxIterTimes" : 512,
            "maxPreemptCount" : 0,
            "supportSelectBatch" : false,
            "maxQueueDelayMicroseconds" : 5000
        }
    }
}
```

11. Error messages:

```text
[ERROR] [infer_backend_manager.cpp:80] : Failed to open backend library: libmindie_llm_manager.so: cannot open shared object file: No such file or directory
fail to create engine mindieservice_llm_engine
Failed to create backend manager
```

Solution: this usually means the environment scripts have not been sourced in the current shell (for example after opening a new terminal). Run the source commands again:

```bash
source /usr/local/Ascend/ascend-toolkit/set_env.sh
source /usr/local/Ascend/mindie/set_env.sh
source /usr/local/Ascend/nnal/atb/set_env.sh
source /usr/local/Ascend/llm_model/set_env.sh
source /usr/local/Ascend/mindie/latest/mindie-service/set_env.sh
```

Then start ./bin/mindieservice_daemon again.
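The error means the dynamic loader could not find libmindie_llm_manager.so, which the set_env.sh scripts are responsible for putting on the library path. The sketch below sources all of them in one place, reports any that are missing, and then checks whether the library is visible on LD_LIBRARY_PATH. Both functions are hypothetical helpers, the script list simply follows the paths used in this article, and `find_on_ld_path` only inspects LD_LIBRARY_PATH, not ld.so.cache or RPATH entries.

```bash
#!/usr/bin/env bash
# Hypothetical troubleshooting helpers (not from the original guide).

# Environment scripts used in this article, in the order they are sourced.
ASCEND_ENV_SCRIPTS=(
  /usr/local/Ascend/ascend-toolkit/set_env.sh
  /usr/local/Ascend/mindie/set_env.sh
  /usr/local/Ascend/nnal/atb/set_env.sh
  /usr/local/Ascend/llm_model/set_env.sh
  /usr/local/Ascend/mindie/latest/mindie-service/set_env.sh
)

# source_ascend_env [SCRIPT...]: source each script (default: the list above)
# into the current shell; return 1 if any of them is missing.
source_ascend_env() {
  local -a env_scripts=("$@")
  local env_script env_missing=0
  if (( ${#env_scripts[@]} == 0 )); then
    env_scripts=("${ASCEND_ENV_SCRIPTS[@]}")
  fi
  # Clear positional parameters so scripts that read their own arguments
  # do not see this function's arguments.
  set --
  for env_script in "${env_scripts[@]}"; do
    if [[ -f "$env_script" ]]; then
      # shellcheck source=/dev/null
      source "$env_script"
    else
      echo "missing: $env_script" >&2
      env_missing=1
    fi
  done
  return "$env_missing"
}

# find_on_ld_path LIB: print the first LD_LIBRARY_PATH entry containing LIB.
find_on_ld_path() {
  local lib="$1" dir
  local -a dirs
  IFS=: read -ra dirs <<< "${LD_LIBRARY_PATH:-}"
  for dir in "${dirs[@]}"; do
    if [[ -n "$dir" && -e "$dir/$lib" ]]; then
      printf '%s\n' "$dir/$lib"
      return 0
    fi
  done
  return 1
}
```

Because the environment has to persist in the shell that starts the daemon, load these functions with `source` rather than executing them as a script, then run `source_ascend_env && find_on_ld_path libmindie_llm_manager.so` before `./bin/mindieservice_daemon`. A "missing:" line points directly at the package whose installation or path is wrong.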
