When the Internet Meets the AI Storage Revolution: How 3FS and Kunpeng Build a High-Performance Data Engine

Notes on building, deploying, and testing 3FS on the Arm-based Kunpeng platform

A record of adapting the 3FS storage engine to the Kunpeng platform

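The storage dilemma of the AI era, and how 3FS breaks through it

In the current wave of large-model training and inference, storage throughput and latency have become key bottlenecks for efficiency. Traditional storage architectures struggle with real-time processing of PB-scale data, while commercial solutions such as DDN and VAST Data, although fast, are closed source and expensive, which puts them out of reach of small and medium-sized companies. In February 2025, DeepSeek open-sourced 3FS (Fire-Flyer File System), a high-performance parallel file system. With a reported read throughput of 6.6 TiB/s and strong consistency semantics, it targets the pain points of AI data access directly and has been described in the industry as a milestone that "upends storage architecture".

The core strengths of 3FS are:

- Optimized for the whole AI pipeline: it covers data preprocessing, training sample loading, checkpoint recovery, and KVCache for inference, accelerating storage and compute together;

- Disaggregated architecture: it combines the throughput of thousands of SSDs with the bandwidth of the RDMA network, so applications do not depend on data locality;

- Open-source ecosystem: it fills the gap in open-source high-performance parallel file systems and lowers the technical barrier for companies.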

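Why the Kunpeng platform?

With the performance, energy efficiency, and ecosystem support of its Arm architecture, the Kunpeng platform is well suited to AI and big-data workloads. The many-core design and compute capacity of Kunpeng processors match the performance demands of 3FS. In addition, Kunpeng works together with Ascend processors to provide more compute for AI scenarios.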

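In practice: the "chemistry" between 3FS and the Kunpeng Arm platform

We chose TaiShan servers for the adaptation work. The key steps and results are described below.

Porting the storage engine: from x86 to Arm

- Code adaptation: adjust the build toolchain and resolve the address bit-offset differences between x86 and Arm systems, so that 3FS runs on the openEuler operating system on the Arm platform.

- A more complete deployment procedure: supplement the official documentation to improve the deployment experience of 3FS on Arm.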

I. Deployment environment

- Node information

| Node | IP | Memory | NVMe SSD | RDMA | OS |
|---|---|---|---|---|---|
| meta | 192.168.65.10 | 256GB | - | 100GE ConnectX-6 | openEuler 22.03 SP4 |
| fuseclient1 | 192.168.65.11 | 256GB | - | 100GE ConnectX-6 | openEuler 22.03 SP4 |
| fuseclient2 | 192.168.65.12 | 256GB | - | 100GE ConnectX-6 | openEuler 22.03 SP4 |
| fuseclient3 | 192.168.65.13 | 256GB | - | 100GE ConnectX-6 | openEuler 22.03 SP4 |
| storage1 | 192.168.65.14 | 1TB | 8 × 3TB | 100GE ConnectX-6 | openEuler 22.03 SP4 |
| storage2 | 192.168.65.15 | 1TB | 8 × 3TB | 100GE ConnectX-6 | openEuler 22.03 SP4 |
| storage3 | 192.168.65.16 | 1TB | 8 × 3TB | 100GE ConnectX-6 | openEuler 22.03 SP4 |

- Service information

| Service | Binary | Config files | NodeID | Node |
|---|---|---|---|---|
| monitor | monitor_collector_main | monitor_collector_main.toml | - | meta |
| admin_cli | admin_cli | admin_cli.toml, fdb.cluster | - | meta, storage1, storage2, storage3 |
| mgmtd | mgmtd_main | mgmtd_main_launcher.toml, mgmtd_main.toml, mgmtd_main_app.toml, fdb.cluster | 1 | meta |
| meta | meta_main | meta_main_launcher.toml, meta_main.toml, meta_main_app.toml, fdb.cluster | 100 | meta |
| storage | storage_main | storage_main_launcher.toml, storage_main.toml, storage_main_app.toml | 10001~10003 | storage1, storage2, storage3 |
| client | hf3fs_fuse_main | hf3fs_fuse_main_launcher.toml, hf3fs_fuse_main.toml | - | meta |

二、环境准备

关闭防火墙与设置seliunx模式

**注意:以下操作在所有节点进行操作**

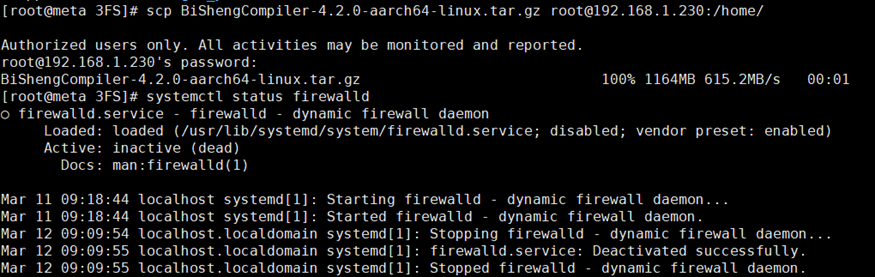

关闭防火墙

systemctl stop firewalld

systemctl disable firewalld

systemctl status firewalld

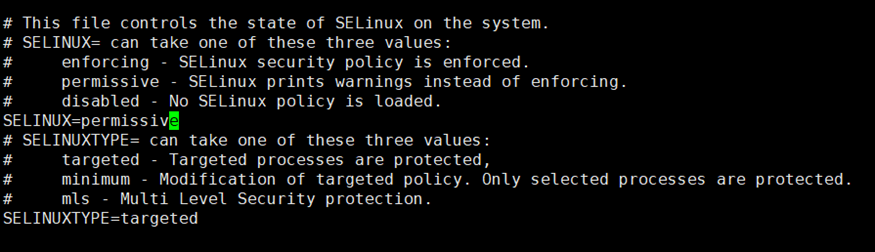

设置selinux为permissive模式

vi /etc/selinux/config

SELINUX=permissive
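Editing the file by hand on seven nodes is easy to get wrong, so the same change can be scripted. The sketch below is a convenience under stated assumptions, not part of the original procedure: `set_selinux_permissive` is a hypothetical helper name, and it takes the config path as an argument so that it can be tried on a copy first. Keep in mind that /etc/selinux/config is only read at boot; `setenforce 0` switches the running system to permissive mode immediately.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original article): rewrite the SELINUX=
# line of an SELinux config file to "permissive".
set_selinux_permissive() {
    local cfg="$1"
    # Only the line that starts with "SELINUX=" is changed; SELINUXTYPE= and
    # comment lines are left untouched.
    sed -i 's/^SELINUX=.*/SELINUX=permissive/' "$cfg"
    # Print the resulting setting so the change can be verified.
    grep '^SELINUX=' "$cfg"
}

# Usage on a real node (run as root):
#   set_selinux_permissive /etc/selinux/config
#   setenforce 0    # apply to the running system without a reboot
```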

Configure hosts

**Note: perform the following steps on all nodes.**

Add the IP address of the meta node to /etc/hosts on every node:

vi /etc/hosts

192.168.65.10 meta

Cluster time synchronization

**Note: perform the following steps on all nodes.**

yum install -y ntp ntpdate

Back up the old configuration on all nodes:

cd /etc && mv ntp.conf ntp.conf.bak

**注意:以下操作在META节点进行操作**

vi /etc/ntp.conf

restrict 127.0.0.1

restrict ::1

restrict 192.168.65.10 mask 255.255.255.0

server 127.127.1.0

fudge 127.127.1.0 stratum 8

# Hosts on local network are less restricted.

restrict 192.168.65.10 mask 255.255.255.0 nomodify notrap

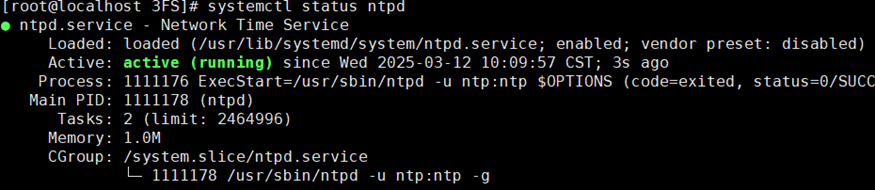

开启ntpd服务

systemctl start ntpd

systemctl enable ntpd

systemctl status ntpd

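**Note: perform the following steps on every node except meta.**

vi /etc/ntp.conf

server 192.168.65.10

On every node except meta, force a synchronization with the server. Wait about 5 minutes after ntpd has started on meta; otherwise the command fails with "no server suitable for synchronization found".

ntpdate meta

On every node except meta, write the time to the hardware clock so that it survives a reboot:

hwclock -w

Install and start the crontab tooling:

yum install -y crontabs

chkconfig crond on

systemctl start crond

crontab -e

Synchronize with the meta node every 10 minutes:

*/10 * * * * /usr/sbin/ntpdate 192.168.65.10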
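`crontab -e` opens an interactive editor, which is awkward when the same entry has to be added on six nodes. The following sketch shows one non-interactive way to do it; `add_cron_line` is a hypothetical helper, not something the original procedure uses. It reads the current crontab on stdin and prints it back with the entry appended only if that exact line is missing, so running it twice does not create duplicates.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read an existing crontab on stdin and print it back
# with the given entry appended, unless that exact line is already present.
add_cron_line() {
    local entry="$1" current
    current=$(cat)
    if [ -n "$current" ]; then
        printf '%s\n' "$current"
    fi
    # -x matches whole lines, -F treats the entry as a fixed string
    # (it contains "*" characters that must not act as a pattern).
    if ! printf '%s\n' "$current" | grep -qxF -- "$entry"; then
        printf '%s\n' "$entry"
    fi
}

# Usage on a non-meta node (run as root):
#   crontab -l 2>/dev/null \
#     | add_cron_line '*/10 * * * * /usr/sbin/ntpdate 192.168.65.10' \
#     | crontab -
```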

III. Building 3FS

3.1 Set up the build environment

The meta node is used as the build node here.

① Install the build dependencies

**Note: perform this step on all nodes.**

# for openEuler

yum install -y cmake libuv-devel lz4-devel xz-devel double-conversion double-conversion-devel libdwarf libdwarf-devel libunwind libunwind-devel libaio-devel gflags-devel glog glog-devel gtest-devel gmock-devel gperftools gperftools-devel gperftools-libs openssl-devel gcc gcc-c++ 'boost*' libatomic autoconf libevent-devel libibverbs libibverbs-devel cargo numactl-devel lld rsync glibc-devel python3-devel meson vim jemalloc

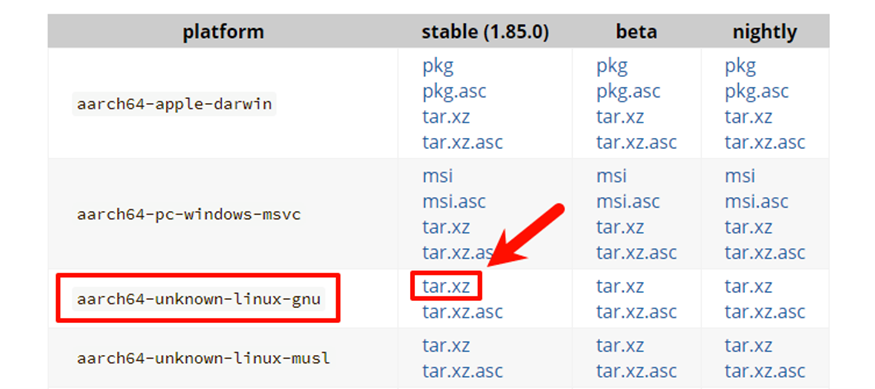

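② Install Rust

**Note: perform this step on the meta node.**

Download the aarch64 archive from the official Rust website, upload it to the server, and extract it.

If the server can reach the Rust website directly, the archive can be fetched with wget instead:

wget https://static.rust-lang.org/dist/rust-1.85.0-aarch64-unknown-linux-gnu.tar.xz

tar -xvf rust-1.85.0-aarch64-unknown-linux-gnu.tar.xz

cd rust-1.85.0-aarch64-unknown-linux-gnu

sh install.sh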

③ 安装foundationdb

**注意:以下操作在META节点进行操作**

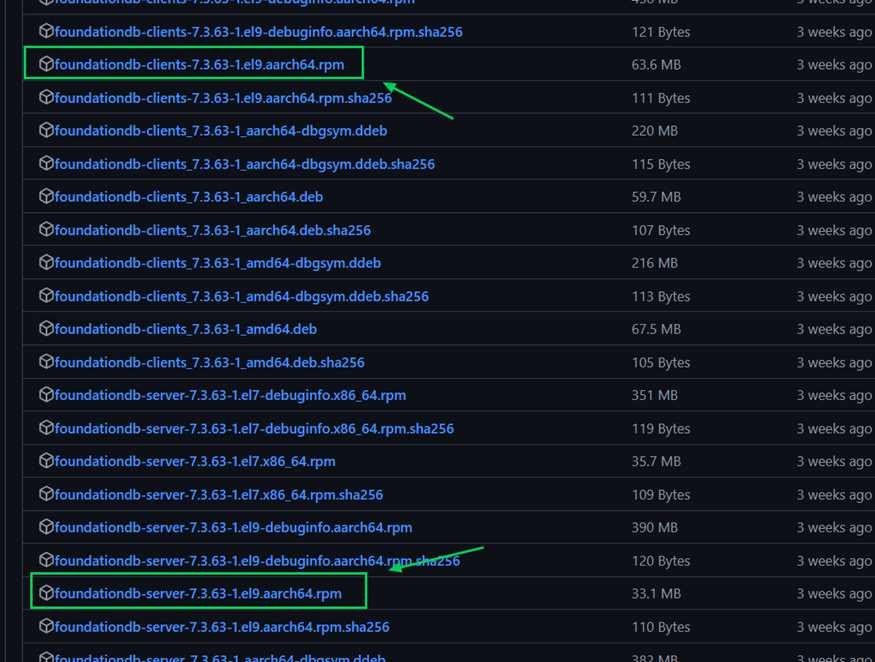

前往foundationdb/Github代码仓获取foundationdb安装包,并上传到服务器进行安装。

如果你的服务器可以直接链接GitHub,可以通过wget或者yum命令安装对应的安装包。

# 通过wget下载

wget https://github.com/apple/foundationdb/releases/download/7.3.63/foundationdb-clients-7.3.63-1.el9.aarch64.rpm

wget https://github.com/apple/foundationdb/releases/download/7.3.63/foundationdb-server-7.3.63-1.el9.aarch64.rpm

rpm -ivh foundationdb-clients-7.3.63-1.el9.aarch64.rpm

rpm -ivh foundationdb-server-7.3.63-1.el9.aarch64.rpm

# 或者通过yum下载

yum install https://github.com/apple/foundationdb/releases/download/7.3.63/foundationdb-clients-7.3.63-1.el9.aarch64.rpm -y

yum install https://github.com/apple/foundationdb/releases/download/7.3.63/foundationdb-server-7.3.63-1.el9.aarch64.rpm -y

④ Install the BiSheng compiler

**Note: perform the following steps on the meta node.**

- Download the BiSheng compiler 4.2.0 archive from the official BiSheng compiler website, upload it to the server, and extract it. Alternatively, fetch the archive directly with wget:

cd /home

wget https://mirrors.huaweicloud.com/kunpeng/archive/compiler/bisheng_compiler/BiShengCompiler-4.2.0-aarch64-linux.tar.gz

tar -xvf BiShengCompiler-4.2.0-aarch64-linux.tar.gz

- Set the environment variable for the current shell session:

export PATH=/home/BiShengCompiler-4.2.0-aarch64-linux/bin:$PATH

- Check the compiler version. The output should look like the lines that follow the command:

clang -v

BiSheng Enterprise 4.2.0.B009 clang version 17.0.6 (958fd14d28f0)

Target: aarch64-unknown-linux-gnu

Thread model: posix

InstalledDir: /home/BiShengCompiler-4.2.0-aarch64-linux/bin

Found candidate GCC installation: /usr/lib/gcc/aarch64-linux-gnu/10.3.1

Selected GCC installation: /usr/lib/gcc/aarch64-linux-gnu/10.3.1

Candidate multilib: .;@m64

Selected multilib: .;@m64

⑤ Install libfuse

**Note: perform the following steps on the meta node and the client nodes.**

The source package is the libfuse 3.16.1 release in the libfuse/libfuse repository on GitHub.

# Download the package

cd /home

wget https://github.com/libfuse/libfuse/releases/download/fuse-3.16.1/fuse-3.16.1.tar.gz

# Extract and build

tar vzxf fuse-3.16.1.tar.gz

cd fuse-3.16.1

mkdir build

cd build

yum install -y meson

meson setup ..

ninja

ninja install

1.2 获取源码及编译

**注意:以下操作在META节点进行操作**

export LD_LIBRARY_PATH=/usr/local/lib64:/usr/local/lib:/usr/local:/usr/lib:/usr/lib64:$LD_LIBRARY_PATH

yum install git -y

cd /home

git clone https://gitee.com/kunpeng_compute/3FS.git

cd 3FS

git checkout origin/openeuler

git submodule update --init --recursive

./patches/apply.sh

cmake -S . -B build -DCMAKE_CXX_COMPILER=clang++ -DCMAKE_C_COMPILER=clang -DCMAKE_BUILD_TYPE=RelWithDebInfo -DCMAKE_EXPORT_COMPILE_COMMANDS=ON

cmake --build build -j

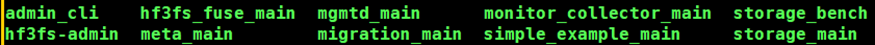

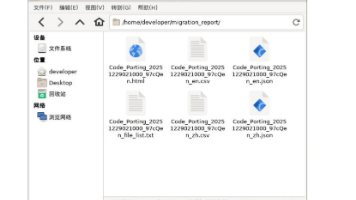

检查编辑结果

cd build/bin

ll
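Rather than scanning the directory listing by eye, the build output can be checked against the binaries named in the service table. The sketch below assumes those six names are the complete set needed for this deployment; `check_3fs_binaries` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which of the service binaries used later in
# this guide are missing from a build output directory.
# Returns 0 if all are present and executable, 1 otherwise.
check_3fs_binaries() {
    local dir="$1" missing=0 bin
    for bin in monitor_collector_main admin_cli mgmtd_main meta_main \
               storage_main hf3fs_fuse_main; do
        if [ -x "$dir/$bin" ]; then
            echo "ok       $bin"
        else
            echo "MISSING  $bin"
            missing=1
        fi
    done
    return "$missing"
}

# Usage on the build node:
#   check_3fs_binaries /home/3FS/build/bin
```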

IV. Deploying 3FS

4.1 Meta node

① Install ClickHouse

**Note: perform the following steps on the meta node.**

- Download the installer and install ClickHouse:

cd /home

curl -k https://clickhouse.com/ | sh

sudo ./clickhouse install

At the end of the installation you are prompted for a password. This guide assumes the password is clickhouse123.

- Change the default ClickHouse port:

chmod 660 /etc/clickhouse-server/config.xml

vim /etc/clickhouse-server/config.xml

Locate the <tcp_port> tag and change the port number to 9123:

...

<tcp_port>9123</tcp_port>

...

- Start ClickHouse:

clickhouse start

- Create the metric table:

clickhouse-client --port 9123 --password 'clickhouse123' -n < /home/3FS/deploy/sql/3fs-monitor.sql

② Update the FoundationDB configuration

**Note: perform the following steps on the meta node.**

- Update the FoundationDB configuration files:

vim /etc/foundationdb/foundationdb.conf

# Under [fdbserver], find the public-address option and set it to the node's own IP followed by :$ID

# For example, if the meta node IP is 192.168.65.10, set it to 192.168.65.10:$ID

vim /etc/foundationdb/fdb.cluster

# Replace 127.0.0.1:4500 in the file with the node's own IP and port 4500

# For example, if the meta node IP is 192.168.65.10, use 192.168.65.10:4500

- Restart the FoundationDB service:

systemctl restart foundationdb.service

- Check the port that FoundationDB listens on:

ss -tuln

# Netid State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

# ...

# tcp LISTEN 0 4096 192.168.65.10:4500 0.0.0.0:*

# ...
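The fdb.cluster edit above is a single substitution, so it can also be done with sed. This is a minimal sketch that assumes the file still contains the default loopback coordinator 127.0.0.1:4500; `set_fdb_cluster_ip` is a hypothetical helper name, and the foundationdb.conf change is not covered by it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point the coordinator address in an fdb.cluster file
# at a routable IP instead of the loopback default.
set_fdb_cluster_ip() {
    local file="$1" ip="$2"
    # Only the address after "@" is rewritten; the description and ID in
    # front of it are kept as they are.
    sed -i "s/@127\.0\.0\.1:4500/@${ip}:4500/" "$file"
    cat "$file"
}

# Usage on the meta node (run as root), before restarting foundationdb:
#   set_fdb_cluster_ip /etc/foundationdb/fdb.cluster 192.168.65.10
```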

③ 启动monitor_collector

**注意:以下操作在META节点进行操作**

-

从编译服务器获取二进制文件以及配置文件,本文编译节点为

Meta节点:mkdir -p /var/log/3fs mkdir -p /opt/3fs/{bin,etc} rsync -avz meta:/home/3FS/build/bin/monitor_collector_main /opt/3fs/bin/ rsync -avz meta:/home/3FS/configs/monitor_collector_main.toml /opt/3fs/etc/ rsync -avz meta:/home/3FS/deploy/systemd/monitor_collector_main.service /usr/lib/systemd/system -

修改配置文件

monitor_collector_main.toml:vim /opt/3fs/etc/monitor_collector_main.toml... [server.base.groups.listener] filter_list = ['enp1s0f0np0'] # 查询RDMA网卡名填入 listen_port = 10000 listen_queue_depth = 4096 rdma_listen_ethernet = true reuse_port = false ... [server.monitor_collector.reporter.clickhouse] db = '3fs' host = '127.0.0.1' passwd = 'clickhouse123' port = '9123' user = 'default' -

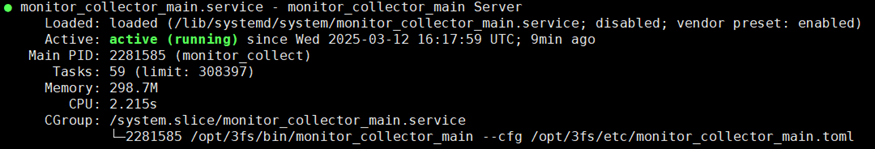

启动monitor_collector服务:

systemctl start monitor_collector_main -

检查monitor_collector状态:

systemctl status monitor_collector_main

-

检查端口情况:

ss -tuln # Netid State Recv-Q Send-Q Local Address:Port Peer Address:Port Process # ... # tcp LISTEN 0 4096 192.168.65.10:10000 0.0.0.0:* # ...**注意:**这里只能有一个

192.168.65.10:10000不能存在127.0.0.1:10000避免其他服务连接端口错误。如果存在多个10000端口,请检查上面monitor_collector_main.toml文件中是否填写filter_list配置项,下不赘述。
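The filter_list value has to be the network interface that belongs to the RDMA NIC, and that name differs between machines. One way to find it, sketched here as an assumption rather than the method the original authors used, is to walk the sysfs entries that the kernel's RDMA drivers expose under /sys/class/infiniband. `list_rdma_netdevs` is a hypothetical helper name; its optional argument overrides the sysfs root so the function can be exercised against a fake tree.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<rdma device> <network interface>" pairs, one
# per line, by walking sysfs. The optional argument replaces /sys for testing.
list_rdma_netdevs() {
    local root="${1:-/sys}" path dev
    for path in "$root"/class/infiniband/*/device/net/*; do
        [ -e "$path" ] || continue          # the glob matched nothing
        dev=${path#"$root"/class/infiniband/}
        # First path component is the RDMA device, last is the interface.
        echo "${dev%%/*} ${path##*/}"
    done
}

# Usage on a node with an RDMA NIC:
#   list_rdma_netdevs
# The second column is the interface name to put into filter_list.
```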

④ Install the Admin Client

**Note: perform the following steps on all nodes.**

- Fetch the binary and config files from the build server:

mkdir -p /var/log/3fs

mkdir -p /opt/3fs/{bin,etc}

rsync -avz meta:/home/3FS/build/bin/admin_cli /opt/3fs/bin

rsync -avz meta:/home/3FS/configs/admin_cli.toml /opt/3fs/etc

rsync -avz meta:/etc/foundationdb/fdb.cluster /opt/3fs/etc

rsync -avz meta:/usr/lib64/libfdb_c.so /usr/lib64/

- Update the config file admin_cli.toml:

vim /opt/3fs/etc/admin_cli.toml

...

cluster_id = "stage"

...

[fdb]

clusterFile = '/opt/3fs/etc/fdb.cluster'

[mgmtd_client]

mgmtd_server_addresses = ["RDMA://192.168.65.10:8000"]

...

- Show the help:

/opt/3fs/bin/admin_cli -cfg /opt/3fs/etc/admin_cli.toml help

⑤ 启动Mgmtd Service

**注意:以下操作在META节点进行操作**

-

从编译服务器获取二进制文件以及配置文件:

mkdir -p /var/log/3fs mkdir -p /opt/3fs/{bin,etc} rsync -avz meta:/home/3FS/build/bin/mgmtd_main /opt/3fs/bin rsync -avz meta:/home/3FS/configs/{mgmtd_main.toml,mgmtd_main_launcher.toml,mgmtd_main_app.toml} /opt/3fs/etc rsync -avz meta:/home/3FS/deploy/systemd/mgmtd_main.service /usr/lib/systemd/system -

修改配置文件

mgmtd_main_app.toml:vim /opt/3fs/etc/mgmtd_main_app.tomlallow_empty_node_id = true node_id = 1 # 修改node_id 为1 -

修改配置文件

mgmtd_main_launcher.toml:vim /opt/3fs/etc/mgmtd_main_launcher.toml... cluster_id = "stage" ... [fdb] clusterFile = '/opt/3fs/etc/fdb.cluster' ... -

修改配置文件

mgmtd_main.toml:vim /opt/3fs/etc/mgmtd_main.toml... [server.base.groups.listener] filter_list = ['enp1s0f0np0'] # 查询RDMA网卡名填入 ... [server.base.groups.listener] filter_list = ['enp1s0f0np0'] # 查询RDMA网卡名填入 ... [common.monitor.reporters.monitor_collector] remote_ip = "192.168.65.10:10000" # monitor_collector节点ip及端口 ... -

初始化集群

/opt/3fs/bin/admin_cli -cfg /opt/3fs/etc/admin_cli.toml "init-cluster --mgmtd /opt/3fs/etc/mgmtd_main.toml 1 1048576 16" # Init filesystem, root directory layout: chain table ChainTableId(1), chunksize 1048576, stripesize 16 # # Init config for MGMTD version 1 -

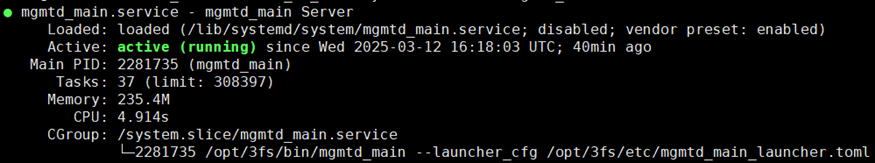

启动服务

systemctl start mgmtd_main -

检查服务状态

systemctl status mgmtd_main

-

检查端口

ss -tuln # Netid State Recv-Q Send-Q Local Address:Port Peer Address:Port Process # ... # tcp LISTEN 0 4096 192.168.65.10:8000 0.0.0.0:* # tcp LISTEN 0 4096 192.168.65.10:9000 0.0.0.0:* # ... -

检查集群Nodes List

/opt/3fs/bin/admin_cli -cfg /opt/3fs/etc/admin_cli.toml "list-nodes" # Id Type Status Hostname Pid Tags LastHeartbeatTime ConfigVersion ReleaseVersion # 1 MGMTD PRIMARY_MGMTD meta 2281735 [] N/A 1(UPTODATE) 250228-dev-1-999999-923bdd7c

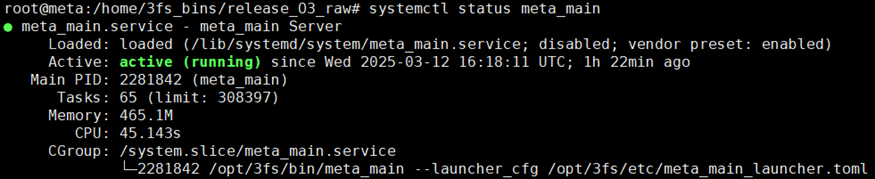

⑥ Start the Meta server

**Note: perform the following steps on the meta node.**

- Fetch the binary and config files from the build server:

mkdir -p /var/log/3fs

mkdir -p /opt/3fs/{bin,etc}

rsync -avz meta:/home/3FS/build/bin/meta_main /opt/3fs/bin

rsync -avz meta:/home/3FS/configs/{meta_main_launcher.toml,meta_main.toml,meta_main_app.toml} /opt/3fs/etc

rsync -avz meta:/home/3FS/deploy/systemd/meta_main.service /usr/lib/systemd/system

- Update the config file meta_main_app.toml:

vim /opt/3fs/etc/meta_main_app.toml

allow_empty_node_id = true

node_id = 100 # set node_id to 100

- Update the config file meta_main_launcher.toml:

vim /opt/3fs/etc/meta_main_launcher.toml

...

cluster_id = "stage"

...

[mgmtd_client]

mgmtd_server_addresses = ["RDMA://192.168.65.10:8000"]

...

- Update the config file meta_main.toml:

vim /opt/3fs/etc/meta_main.toml

...

[server.mgmtd_client]

mgmtd_server_addresses = ["RDMA://192.168.65.10:8000"]

[common.monitor.reporters.monitor_collector]

remote_ip = "192.168.65.10:10000"

...

[server.fdb]

clusterFile = '/opt/3fs/etc/fdb.cluster'

...

[server.base.groups.listener]

filter_list = ['enp1s0f0np0']

listen_port = 8001

...

[server.base.groups.listener]

filter_list = ['enp1s0f0np0']

listen_port = 9001

...

- Upload the Meta node configuration to the Mgmtd server:

/opt/3fs/bin/admin_cli -cfg /opt/3fs/etc/admin_cli.toml "set-config --type META --file /opt/3fs/etc/meta_main.toml"

- Start the service:

systemctl start meta_main

- Check the service status:

systemctl status meta_main

- Check the listening ports:

ss -tuln

# Netid State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

# ...

# tcp LISTEN 0 4096 192.168.65.10:8001 0.0.0.0:*

# tcp LISTEN 0 4096 192.168.65.10:9001 0.0.0.0:*

# ...

- Check the cluster node list:

/opt/3fs/bin/admin_cli -cfg /opt/3fs/etc/admin_cli.toml "list-nodes"

# Id Type Status Hostname Pid Tags LastHeartbeatTime ConfigVersion ReleaseVersion

# 1 MGMTD PRIMARY_MGMTD meta 2281735 [] N/A 1(UPTODATE) 250228-dev-1-999999-923bdd7c

# 100 META HEARTBEAT_CONNECTED meta 2281842 [] 2025-03-12 17:01:32 1(UPTODATE) 250228-dev-1-999999-923bdd7c

4.2 Storage nodes

**Note: perform the following steps on the storage nodes.**

① Prepare the SSDs

- List the disks that are available for mounting:

lsblk

# NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

# ...

# nvme0n1 259:0 0 2.9T 0 disk

# nvme1n1 259:1 0 2.9T 0 disk

# nvme2n1 259:2 0 2.9T 0 disk

# nvme3n1 259:3 0 2.9T 0 disk

# nvme4n1 259:4 0 2.9T 0 disk

# nvme5n1 259:5 0 2.9T 0 disk

# nvme6n1 259:6 0 2.9T 0 disk

# nvme7n1 259:7 0 2.9T 0 disk

# ...

- Create the directories:

mkdir -p /storage/data{0..7}

mkdir -p /var/log/3fs

- Format the disks and mount them.

Caution: in our environment the 8 NVMe drives are numbered consecutively from 0 to 7, so the loop below works as written. Adjust the device names to your own environment; do not copy and run it blindly.

for i in {0..7};do mkfs.xfs -L data${i} /dev/nvme${i}n1;mount -o noatime,nodiratime -L data${i} /storage/data${i};done

mkdir -p /storage/data{0..7}/3fs

- Check the result of formatting and mounting:

lsblk

# NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

# ...

# nvme0n1 259:0 0 2.9T 0 disk /storage/data0

# nvme1n1 259:1 0 2.9T 0 disk /storage/data1

# nvme2n1 259:2 0 2.9T 0 disk /storage/data2

# nvme3n1 259:3 0 2.9T 0 disk /storage/data3

# nvme4n1 259:4 0 2.9T 0 disk /storage/data4

# nvme5n1 259:5 0 2.9T 0 disk /storage/data5

# nvme6n1 259:6 0 2.9T 0 disk /storage/data6

# nvme7n1 259:7 0 2.9T 0 disk /storage/data7

# ...
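Because mkfs.xfs destroys whatever is on the device, it can help to print the format-and-mount plan for an explicit device list and review it before anything runs. The sketch below is one way to do that, assuming the same label and mount-point scheme as the loop above; `plan_disk_setup` is a hypothetical helper name, and it only prints commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (not run) the format-and-mount commands for an
# explicit list of NVMe devices. Devices are assigned the labels data0,
# data1, ... in the order they are given.
plan_disk_setup() {
    local i=0 dev
    for dev in "$@"; do
        echo "mkfs.xfs -L data${i} ${dev}"
        echo "mount -o noatime,nodiratime -L data${i} /storage/data${i}"
        i=$((i + 1))
    done
}

# Usage: review the printed plan first, then feed it to a shell only if the
# device list is correct for this machine.
#   plan_disk_setup /dev/nvme0n1 /dev/nvme1n1
#   plan_disk_setup /dev/nvme0n1 /dev/nvme1n1 | sh
```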

② Raise the maximum number of aio requests

sysctl -w fs.aio-max-nr=67108864

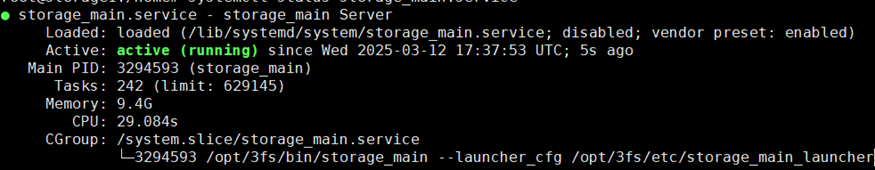
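`sysctl -w` changes only the running kernel, so the value is lost at the next reboot. If the setting should persist, a drop-in file under /etc/sysctl.d is the usual mechanism. The sketch below is an optional addition to the original steps: `persist_aio_max_nr` and the file name 90-3fs-aio.conf are hypothetical choices, and the target directory is a parameter so the function can be tried outside /etc.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a sysctl drop-in so that fs.aio-max-nr survives
# a reboot. Arguments: target directory (default /etc/sysctl.d) and value.
persist_aio_max_nr() {
    local dir="${1:-/etc/sysctl.d}" value="${2:-67108864}"
    printf 'fs.aio-max-nr = %s\n' "$value" > "$dir/90-3fs-aio.conf"
    cat "$dir/90-3fs-aio.conf"
}

# Usage on each storage node (run as root); "sysctl --system" reloads all
# sysctl configuration files:
#   persist_aio_max_nr && sysctl --system
```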

③ Start the Storage server

- Fetch the binary and config files from the build server:

mkdir -p /opt/3fs/{bin,etc}

mkdir -p /var/log/3fs

rsync -avz meta:/home/3FS/build/bin/storage_main /opt/3fs/bin

rsync -avz meta:/home/3FS/configs/{storage_main_launcher.toml,storage_main.toml,storage_main_app.toml} /opt/3fs/etc

rsync -avz meta:/home/3FS/deploy/systemd/storage_main.service /usr/lib/systemd/system

rsync -avz meta:/usr/lib64/libfdb_c.so /usr/lib64

- Update the config file storage_main_app.toml:

vim /opt/3fs/etc/storage_main_app.toml

allow_empty_node_id = true

node_id = 10001 # set node_id; each storage node needs a different value (10001~10003)

- Update the config file storage_main_launcher.toml:

vim /opt/3fs/etc/storage_main_launcher.toml

...

cluster_id = "stage"

...

[mgmtd_client]

mgmtd_server_addresses = ["RDMA://192.168.65.10:8000"]

...

- Update the config file storage_main.toml:

vim /opt/3fs/etc/storage_main.toml

...

[server.base.groups.listener]

filter_list = ['enp133s0f0np0']

listen_port = 8000

...

[server.base.groups.listener]

filter_list = ['enp133s0f0np0']

listen_port = 9000

...

[server.mgmtd]

mgmtd_server_address = ["RDMA://192.168.65.10:8000"]

...

[common.monitor.reporters.monitor_collector]

remote_ip = "192.168.65.10:10000"

...

[server.targets]

target_paths = ["/storage/data0/3fs","/storage/data1/3fs","/storage/data2/3fs","/storage/data3/3fs","/storage/data4/3fs","/storage/data5/3fs","/storage/data6/3fs","/storage/data7/3fs"]

...

- Upload the Storage node configuration to the Mgmtd server:

/opt/3fs/bin/admin_cli -cfg /opt/3fs/etc/admin_cli.toml "set-config --type STORAGE --file /opt/3fs/etc/storage_main.toml"

- Start the service:

systemctl start storage_main

- Check the service status:

systemctl status storage_main

- Check the cluster node list. If the storage nodes do not show up yet, wait 1 to 2 minutes and check again.

/opt/3fs/bin/admin_cli -cfg /opt/3fs/etc/admin_cli.toml "list-nodes"

# Id Type Status Hostname Pid Tags LastHeartbeatTime ConfigVersion ReleaseVersion

# 1 MGMTD PRIMARY_MGMTD meta 2281735 [] N/A 1(UPTODATE) 250228-dev-1-999999-923bdd7c

# 100 META HEARTBEAT_CONNECTED meta 2281842 [] 2025-03-12 17:01:32 1(UPTODATE) 250228-dev-1-999999-923bdd7c

# 10001 STORAGE HEARTBEAT_CONNECTED storage1 3294593 [] 2025-03-12 17:38:13 1(UPTODATE) 250228-dev-1-999999-923bdd7c

# 10002 STORAGE HEARTBEAT_CONNECTED storage2 476286 [] 2025-03-12 17:38:12 1(UPTODATE) 250228-dev-1-999999-923bdd7c

# 10003 STORAGE HEARTBEAT_CONNECTED storage3 2173767 [] 2025-03-12 17:38:12 1(UPTODATE) 250228-dev-1-999999-923bdd7c
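The storage_main_app.toml step is the one place where the three storage nodes must differ: each needs its own node_id. With the hostnames and the 10001~10003 range from the service table, the ID can be derived from the hostname instead of being typed by hand. This is a sketch under that naming assumption; `storage_node_id` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive the storage node_id from a hostname of the form
# storageN (N without leading zeros), following the 10001~10003 numbering in
# the service table. Prints the ID, or fails for any other hostname.
storage_node_id() {
    local host="$1" n
    case "$host" in
        storage[1-9]*) n=${host#storage} ;;
        *) echo "unexpected hostname: $host" >&2; return 1 ;;
    esac
    case "$n" in
        *[!0-9]*) echo "unexpected hostname: $host" >&2; return 1 ;;
    esac
    echo $((10000 + n))
}

# Usage on a storage node, assuming the file already has a "node_id = ..." line:
#   id=$(storage_node_id "$(hostname -s)") &&
#     sed -i "s/^node_id = .*/node_id = ${id}/" /opt/3fs/etc/storage_main_app.toml
```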

4.3 Create the admin user, storage targets, and chain table

**Note: perform the following steps on the meta node.**

- Create the admin user:

/opt/3fs/bin/admin_cli -cfg /opt/3fs/etc/admin_cli.toml "user-add --root --admin 0 root"

# Uid 0

# Name root

# Token AAB7sN/h8QBs7/+B2wBQ03Lp(Expired at N/A)

# IsRootUser true

# IsAdmin true

# Gid 0

Here AAB7sN/h8QBs7/+B2wBQ03Lp is the token. Save it to /opt/3fs/etc/token.txt.

- Install the Python dependencies:

pip3 install -r /home/3FS/deploy/data_placement/requirements.txt

- Generate the data placement model for the chain table:

cd /home

python3 /home/3FS/deploy/data_placement/src/model/data_placement.py \
-ql -relax -type CR --num_nodes 3 --replication_factor 3 --min_targets_per_disk 6

The options to pay attention to are:

--num_nodes: number of storage nodes; --replication_factor: replication factor;

On success, an output/DataPlacementModel-v_* directory is created under the current directory, for example /home/output/DataPlacementModel-v_3-b_6-r_6-k_3-λ_3-lb_3-ub_3.

- Generate the chain table:

python3 /home/3FS/deploy/data_placement/src/setup/gen_chain_table.py \
--chain_table_type CR --node_id_begin 10001 --node_id_end 10003 \
--num_disks_per_node 8 --num_targets_per_disk 6 \
--target_id_prefix 1 --chain_id_prefix 9 \
--incidence_matrix_path /home/output/DataPlacementModel-v_3-b_6-r_6-k_3-λ_3-lb_3-ub_3/incidence_matrix.pickle

The options to pay attention to are:

--node_id_begin: first NodeId of the storage nodes; --node_id_end: last NodeId of the storage nodes; --num_disks_per_node: number of disks mounted on each storage node; --num_targets_per_disk: number of targets on each mounted disk; --incidence_matrix_path: path of the file generated in the previous step;

After the command succeeds, check that the following files were generated in the output directory. create_target_cmd.txt, which the next step reads, should be there as well.

-rw-r--r-- 1 root root 2387 Mar 6 11:55 generated_chains.csv

-rw-r--r-- 1 root root 488 Mar 6 11:55 generated_chain_table.csv

-rw-r--r-- 1 root root 15984 Mar 6 11:55 remove_target_cmd.txt

- Create the storage targets:

/opt/3fs/bin/admin_cli --cfg /opt/3fs/etc/admin_cli.toml --config.user_info.token $(<"/opt/3fs/etc/token.txt") < /home/output/create_target_cmd.txt

- Upload the chains to the mgmtd service:

/opt/3fs/bin/admin_cli --cfg /opt/3fs/etc/admin_cli.toml --config.user_info.token $(<"/opt/3fs/etc/token.txt") "upload-chains /home/output/generated_chains.csv"

- Upload the chain table to the mgmtd service:

/opt/3fs/bin/admin_cli --cfg /opt/3fs/etc/admin_cli.toml --config.user_info.token $(<"/opt/3fs/etc/token.txt") "upload-chain-table --desc stage 1 /home/output/generated_chain_table.csv"

- List the chains:

/opt/3fs/bin/admin_cli -cfg /opt/3fs/etc/admin_cli.toml "list-chains"

# ChainId ReferencedBy ChainVersion Status PreferredOrder Target Target Target

# 900100001 1 1 SERVING [] 101000300101(SERVING-UPTODATE) 101000200101(SERVING-UPTODATE) 101000100101(SERVING-UPTODATE)

# 900100002 1 1 SERVING [] 101000300102(SERVING-UPTODATE) 101000200102(SERVING-UPTODATE) 101000100102(SERVING-UPTODATE)

# ...
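As a sanity check on the chain list, the expected number of chains can be worked out from the parameters passed to gen_chain_table.py. The sketch assumes that every storage target belongs to exactly one chain and that each chain holds one target per replica, which matches the three Target columns shown in the list-chains output; `expected_chains` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: expected chain count, assuming each target belongs to
# exactly one chain and each chain has one target per replica.
# Arguments: nodes, disks per node, targets per disk, replication factor.
expected_chains() {
    local nodes="$1" disks="$2" targets_per_disk="$3" replicas="$4"
    echo $(( nodes * disks * targets_per_disk / replicas ))
}

# This deployment: 3 nodes x 8 disks x 6 targets = 144 targets, 3 per chain.
expected_chains 3 8 6 3   # prints 48

# To compare with the generated file (assuming it starts with one header row):
#   tail -n +2 /home/output/generated_chains.csv | wc -l
```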

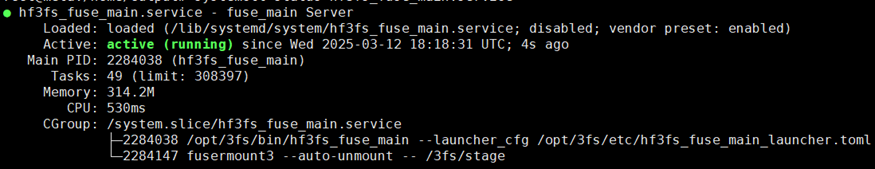
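The token printed by user-add at the start of this section has to end up in /opt/3fs/etc/token.txt without the trailing "(Expired at ...)" part. Copying it by hand works, but a small filter avoids stray characters. The sketch below assumes the output has the "Token <value>(Expired at ...)" line shown earlier; `extract_token` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read user-add output on stdin and print only the token.
extract_token() {
    # Take the second field of the "Token" line and cut everything from the
    # opening parenthesis onward.
    awk '$1 == "Token" { sub(/\(.*/, "", $2); print $2; exit }'
}

# Usage, assuming the user-add output was saved to a file when the user was
# created (the path /root/user-add.out is only an example):
#   extract_token < /root/user-add.out > /opt/3fs/etc/token.txt
```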

4.4 FUSE client nodes

**Note: perform the following steps on the FUSE client nodes.**

- Fetch the binaries and config files from the build server:

mkdir -p /var/log/3fs

mkdir -p /opt/3fs/{bin,etc}

rsync -avz meta:/home/3FS/build/bin/hf3fs_fuse_main /opt/3fs/bin

rsync -avz meta:/home/3FS/build/bin/admin_cli /opt/3fs/bin

rsync -avz meta:/home/3FS/configs/{hf3fs_fuse_main_launcher.toml,hf3fs_fuse_main.toml,hf3fs_fuse_main_app.toml} /opt/3fs/etc

rsync -avz meta:/home/3FS/deploy/systemd/hf3fs_fuse_main.service /usr/lib/systemd/system

rsync -avz meta:/opt/3fs/etc/token.txt /opt/3fs/etc

rsync -avz meta:/usr/lib64/libfdb_c.so /usr/lib64

- Create the mount point:

mkdir -p /3fs/stage

- Update the config file hf3fs_fuse_main_launcher.toml:

vim /opt/3fs/etc/hf3fs_fuse_main_launcher.toml

...

cluster_id = "stage"

mountpoint = '/3fs/stage'

token_file = '/opt/3fs/etc/token.txt'

...

[mgmtd_client]

mgmtd_server_addresses = ["RDMA://192.168.65.10:8000"]

...

- Update the config file hf3fs_fuse_main.toml:

vim /opt/3fs/etc/hf3fs_fuse_main.toml

...

[mgmtd]

mgmtd_server_addresses = ["RDMA://192.168.65.10:8000"]

...

[common.monitor.reporters.monitor_collector]

remote_ip = "192.168.65.10:10000"

...

- Upload the FUSE configuration to the Mgmtd server:

/opt/3fs/bin/admin_cli -cfg /opt/3fs/etc/admin_cli.toml "set-config --type FUSE --file /opt/3fs/etc/hf3fs_fuse_main.toml"

- Start the service:

systemctl start hf3fs_fuse_main

- Check the service status:

systemctl status hf3fs_fuse_main

- Check the mount point:

df -h

# Filesystem Size Used Avail Use% Mounted on

# ...

# hf3fs.stage 70T 650G 70T 1% /3fs/stage

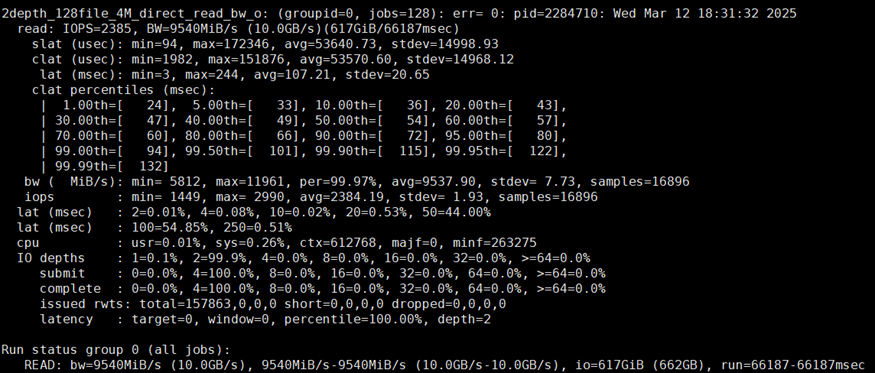

V. Testing 3FS

Run a concurrent read test against 3FS with fio on the 3 clients:

yum install fio -y

fio -numjobs=128 -fallocate=none -iodepth=2 -ioengine=libaio -direct=1 \
-rw=read -bs=4M --group_reporting -size=100M -time_based -runtime=3000 \
-name=2depth_128file_4M_direct_read_bw -directory=/3fs/stage

In the concurrent read test with a 4M block size, every client reaches a bandwidth of 10 GB/s.
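To put the per-client figure in context, it can be compared with the nominal rate of the 100GE NICs listed in the node table. The calculation below is a rough sketch: it assumes GB means 10^9 bytes and ignores protocol overhead, and `line_rate_percent` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: percentage of a NIC's nominal line rate represented by
# a measured bandwidth. Arguments: measured GB/s, link speed in Gbit/s.
line_rate_percent() {
    local gbytes_per_s="$1" link_gbits="$2"
    # 8 bits per byte; integer arithmetic, so the result is rounded down.
    echo $(( gbytes_per_s * 8 * 100 / link_gbits ))
}

# 10 GB/s on a 100 Gbit/s link:
line_rate_percent 10 100   # prints 80
```

Under these assumptions each client is using about 80% of its link, so the network interface is a plausible upper bound for single-client read bandwidth in this setup.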

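VI. Outlook: 3FS + Kunpeng, a new paradigm for AI infrastructure

This adaptation work not only validates the maturity of 3FS in the Arm ecosystem, it also shows how much room there is for the AI technology stack to grow.

The high performance and open-source nature of 3FS offer a new option for the data engines of the AI era. We expect the storage shift led by 3FS to speed up the build-out of AI infrastructure.

The successful deployment of 3FS on the Kunpeng platform demonstrates its potential in high-performance storage and gives AI and big-data workloads a new choice. As the technology and its ecosystem mature, 3FS has a good chance of being adopted in more areas and of becoming a reference point for storage systems.

Follow us for more optimization case studies of 3FS on the Kunpeng platform.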
