[CANN Training Camp] Learning Ascend C on CANN 8.0.0.alpha003: Developing the Add Operator with a Kernel Direct-Invocation Project

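Introduction to the Add operator

What the operator does

The Add operator developed here works on a fixed input shape of 8 * 2048.

The mathematical expression of the Add operator is: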

z = x + y
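As a plain CPU reference for this formula, the sketch below computes z = x + y element by element over the fixed 8 * 2048 shape. It is only an illustration: float stands in for the kernel's half type (standard C++ before C++23 has no built-in 16-bit float), and the names kRows, kCols, kTotal, and add_reference are hypothetical, not part of the sample.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Fixed shape used throughout this walkthrough: 8 x 2048 elements.
constexpr std::size_t kRows = 8;
constexpr std::size_t kCols = 2048;
constexpr std::size_t kTotal = kRows * kCols;  // 16384 elements

// CPU reference for z = x + y; float is a stand-in for the kernel's half type.
std::vector<float> add_reference(const std::vector<float>& x, const std::vector<float>& y) {
    assert(x.size() == kTotal && y.size() == kTotal);
    std::vector<float> z(kTotal);
    for (std::size_t i = 0; i < kTotal; ++i) {
        z[i] = x[i] + y[i];  // element-wise addition
    }
    return z;
}
```

A reference like this is what the kernel output is ultimately compared against.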

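Computation logic

The vector compute interfaces provided by Ascend C all operate on LocalTensor objects, so the input data must first be moved into on-chip storage. A compute interface then adds the two inputs, and the result is moved back out to external storage.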

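The Add implementation is split into three basic tasks: CopyIn, Compute, and CopyOut. CopyIn moves the input tensors xGm and yGm from Global Memory to Local Memory, storing them in xLocal and yLocal. Compute adds xLocal and yLocal and stores the result in zLocal. CopyOut moves the output data from zLocal to the output tensor zGm in Global Memory.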
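To make the data flow of the three stages concrete, here is a host-side C++ analogy, not Ascend C code. The vectors play the role of xGm, yGm, and zGm, the std::queue objects stand in for the VECIN and VECOUT queues, and three_stage_add is a hypothetical name. It runs the stages back to back for each tile, without the overlap that real hardware pipelining provides.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <queue>
#include <utility>
#include <vector>

// Host-side analogy of CopyIn -> Compute -> CopyOut (illustration only).
void three_stage_add(const std::vector<float>& xGm, const std::vector<float>& yGm,
                     std::vector<float>& zGm, std::size_t tileLength) {
    assert(xGm.size() == yGm.size() && zGm.size() == xGm.size());
    assert(tileLength > 0 && xGm.size() % tileLength == 0);
    std::queue<std::vector<float>> inQueueX, inQueueY, outQueueZ;
    const std::size_t loopCount = xGm.size() / tileLength;
    for (std::size_t progress = 0; progress < loopCount; ++progress) {
        const auto offset = static_cast<std::ptrdiff_t>(progress * tileLength);
        const auto len = static_cast<std::ptrdiff_t>(tileLength);
        // CopyIn: copy one tile from "global" memory into local buffers and enqueue them.
        inQueueX.emplace(xGm.begin() + offset, xGm.begin() + offset + len);
        inQueueY.emplace(yGm.begin() + offset, yGm.begin() + offset + len);
        // Compute: dequeue the inputs, add them element-wise, enqueue the result.
        std::vector<float> xLocal = std::move(inQueueX.front());
        inQueueX.pop();
        std::vector<float> yLocal = std::move(inQueueY.front());
        inQueueY.pop();
        std::vector<float> zLocal(tileLength);
        for (std::size_t i = 0; i < tileLength; ++i) {
            zLocal[i] = xLocal[i] + yLocal[i];
        }
        outQueueZ.push(std::move(zLocal));
        // CopyOut: dequeue the result and write it back to "global" memory.
        std::copy(outQueueZ.front().begin(), outQueueZ.front().end(), zGm.begin() + offset);
        outQueueZ.pop();
    }
}
```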

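Invoking the operator

CPU-side verification is done mainly through the interfaces of the CPU debugging library, such as the ICPU_RUN_KF CPU debugging macro.

NPU-side verification is done mainly with the ACLRT_LAUNCH_KERNEL kernel launch macro; the main.cpp code in this walkthrough launches the kernel through the add_custom_do wrapper, which uses the <<<>>> kernel call operator.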

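Operator development practice

Choosing a development environment

As before, I used Huawei Cloud Developer Space, which is convenient and works well for learning operator development. It offers 2 hours of free use per day, which is quite handy.

Address: https://developer.huaweicloud.com/space/home

Environment versions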

NPU:Ascend910B3

CANN:CANN8.0.0.alpha003

Installing CANN

    wget https://ascend-repo.obs.cn-east-2.myhuaweicloud.com/Milan-ASL/Milan-ASL%20V100R001C20SPC703/Ascend-cann-toolkit_8.0.0.alpha003_linux-aarch64.run
    bash Ascend-cann-toolkit_8.0.0.alpha003_linux-aarch64.run --full

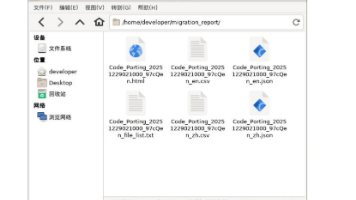

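Project files

Kernel implementation file: add_custom.cpp

Application that calls the operator: main.cpp

Script that generates the input data and the golden (expected) data: gen_data.py

Script that checks whether the output data matches the golden data: verify_result.py

CMake project file for building the operator to run on the CPU or the NPU: CMakeLists.txt

Script that builds and runs the operator: run.sh

Kernel function development (add_custom.cpp)

Define the kernel function, which calls the Init and Process functions of the operator class: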

    extern "C" __global__ __aicore__ void add_custom(GM_ADDR x, GM_ADDR y, GM_ADDR z)
    {
        KernelAdd op;
        op.Init(x, y, z);
        op.Process();
    }

The parameters are declared with the GM_ADDR macro, where the __gm__ qualifier marks a pointer into Global Memory:

    #define GM_ADDR __gm__ uint8_t*
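Because GM_ADDR is a byte pointer, Init casts it to `__gm__ half*` before adding the per-core offset, so the offset counts elements rather than bytes. The host-side sketch below reproduces that arithmetic under stated assumptions: the `__gm__` qualifier is dropped so an ordinary compiler accepts it, uint16_t stands in for half (both are 2 bytes), and HostGmAddr, HalfBits, and block_start are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical host-side stand-in for GM_ADDR (no __gm__ qualifier on the host).
using HostGmAddr = std::uint8_t*;
// uint16_t stands in for half: same size, same bit pattern storage.
using HalfBits = std::uint16_t;

// Mirrors `(__gm__ half*)x + BLOCK_LENGTH * GetBlockIdx()`: cast first, then offset,
// so the pointer advances by blockLength * blockIdx ELEMENTS (2 bytes each).
// Adding the offset to the uint8_t* directly would only move that many bytes.
HalfBits* block_start(HostGmAddr base, std::size_t blockLength, std::size_t blockIdx) {
    return reinterpret_cast<HalfBits*>(base) + blockLength * blockIdx;
}
```

For blockIdx = 1 and blockLength = 2048, the start moves 4096 bytes into the buffer.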

Define the KernelAdd operator class

    class KernelAdd {
    public:
        __aicore__ inline KernelAdd() {}
        // Init: performs the memory-related initialization
        __aicore__ inline void Init(GM_ADDR x, GM_ADDR y, GM_ADDR z) {}
        // Process: core routine implementing the operator logic; calls the private
        // CopyIn, Compute, and CopyOut functions to run the three-stage vector pipeline
        __aicore__ inline void Process() {}

    private:
        // CopyIn: handles the CopyIn stage; called by Process
        __aicore__ inline void CopyIn(int32_t progress) {}
        // Compute: handles the Compute stage; called by Process
        __aicore__ inline void Compute(int32_t progress) {}
        // CopyOut: handles the CopyOut stage; called by Process
        __aicore__ inline void CopyOut(int32_t progress) {}

    private:
        AscendC::TPipe pipe;  // Pipe object that manages memory
        // Queues for the input data; QuePosition is VECIN
        AscendC::TQue<AscendC::QuePosition::VECIN, BUFFER_NUM> inQueueX, inQueueY;
        // Queue for the output data; QuePosition is VECOUT
        AscendC::TQue<AscendC::QuePosition::VECOUT, BUFFER_NUM> outQueueZ;
        // Objects managing the Global Memory addresses: xGm and yGm are inputs, zGm is the output
        AscendC::GlobalTensor<half> xGm;
        AscendC::GlobalTensor<half> yGm;
        AscendC::GlobalTensor<half> zGm;
    };

The Init function and the tiling constants it uses

    #include "kernel_operator.h"

    constexpr int32_t TOTAL_LENGTH = 8 * 2048;                            // total length of data
    constexpr int32_t USE_CORE_NUM = 8;                                   // number of cores used
    constexpr int32_t BLOCK_LENGTH = TOTAL_LENGTH / USE_CORE_NUM;         // length computed by each core
    constexpr int32_t TILE_NUM = 8;                                       // split each core's data into 8 tiles
    constexpr int32_t BUFFER_NUM = 2;                                     // number of tensors in each queue
    constexpr int32_t TILE_LENGTH = BLOCK_LENGTH / TILE_NUM / BUFFER_NUM; // halve each tile for double buffering

    __aicore__ inline void Init(GM_ADDR x, GM_ADDR y, GM_ADDR z)
    {
        // get the start address of the current core's slice (multi-core parallelism)
        xGm.SetGlobalBuffer((__gm__ half *)x + BLOCK_LENGTH * AscendC::GetBlockIdx(), BLOCK_LENGTH);
        yGm.SetGlobalBuffer((__gm__ half *)y + BLOCK_LENGTH * AscendC::GetBlockIdx(), BLOCK_LENGTH);
        zGm.SetGlobalBuffer((__gm__ half *)z + BLOCK_LENGTH * AscendC::GetBlockIdx(), BLOCK_LENGTH);
        // the pipe allocates memory for each queue; the size is in bytes
        pipe.InitBuffer(inQueueX, BUFFER_NUM, TILE_LENGTH * sizeof(half));
        pipe.InitBuffer(inQueueY, BUFFER_NUM, TILE_LENGTH * sizeof(half));
        pipe.InitBuffer(outQueueZ, BUFFER_NUM, TILE_LENGTH * sizeof(half));
    }
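The tiling constants rely on integer division, which truncates silently. A compile-time check like the following sketch makes the arithmetic explicit: each core handles 2048 elements, each tile holds 128 halves (256 bytes), and Init requests 3 queues x 2 buffers x 256 bytes = 1536 bytes of local memory. uint16_t stands in for half, TILE_BYTES and UB_BYTES are hypothetical names, and the 32-byte alignment check reflects my understanding that DataCopy moves data in 32-byte blocks; treat it as an assumption and confirm it in the Ascend C documentation for your version.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Same tiling constants as the kernel; uint16_t stands in for half (2 bytes).
constexpr std::int32_t TOTAL_LENGTH = 8 * 2048;
constexpr std::int32_t USE_CORE_NUM = 8;
constexpr std::int32_t BLOCK_LENGTH = TOTAL_LENGTH / USE_CORE_NUM;          // 2048 per core
constexpr std::int32_t TILE_NUM = 8;
constexpr std::int32_t BUFFER_NUM = 2;
constexpr std::int32_t TILE_LENGTH = BLOCK_LENGTH / TILE_NUM / BUFFER_NUM;  // 128 per tile
constexpr std::size_t TILE_BYTES = TILE_LENGTH * sizeof(std::uint16_t);     // 256 bytes per buffer

// The divisions above truncate, so check that they are exact.
static_assert(TOTAL_LENGTH % USE_CORE_NUM == 0, "data must split evenly across cores");
static_assert(BLOCK_LENGTH % (TILE_NUM * BUFFER_NUM) == 0, "block must split evenly into tiles");
// Assumption: GM <-> local copies work on 32-byte blocks.
static_assert(TILE_BYTES % 32 == 0, "tile size should be a multiple of 32 bytes");

// Local memory requested by Init: 3 queues x BUFFER_NUM buffers x TILE_BYTES.
constexpr std::size_t UB_BYTES = 3 * BUFFER_NUM * TILE_BYTES;  // 1536 bytes
```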

Following the vector programming paradigm, the kernel implementation is split into three basic tasks: CopyIn, Compute, and CopyOut. The Process function calls them as follows:

    __aicore__ inline void Process()
    {
        // the loop count is doubled because of double buffering
        constexpr int32_t loopCount = TILE_NUM * BUFFER_NUM;
        // tiling strategy, pipeline parallelism
        for (int32_t i = 0; i < loopCount; i++) {
            CopyIn(i);
            Compute(i);
            CopyOut(i);
        }
    }
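Each loop iteration handles one TILE_LENGTH-sized tile, so doubling the iteration count for double buffering while halving the tile length still covers the whole block. This plain C++ sketch (tile_offsets is a hypothetical helper) lists the element offsets that `progress * TILE_LENGTH` produces inside one core's block.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Offsets (in elements, relative to one core's block) visited by Process():
// iteration `progress` covers [progress * tileLength, (progress + 1) * tileLength).
std::vector<std::int32_t> tile_offsets(std::int32_t tileNum, std::int32_t bufferNum,
                                       std::int32_t tileLength) {
    const std::int32_t loopCount = tileNum * bufferNum;  // doubled for double buffering
    std::vector<std::int32_t> offsets;
    offsets.reserve(static_cast<std::size_t>(loopCount));
    for (std::int32_t i = 0; i < loopCount; ++i) {
        offsets.push_back(i * tileLength);
    }
    return offsets;
}
```

With TILE_NUM = 8, BUFFER_NUM = 2, and TILE_LENGTH = 128, this yields 16 offsets from 0 to 1920, and the last tile ends exactly at BLOCK_LENGTH = 2048.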

CopyIn function implementation

    __aicore__ inline void CopyIn(int32_t progress)
    {
        // allocate tensors from the queue memory
        AscendC::LocalTensor<half> xLocal = inQueueX.AllocTensor<half>();
        AscendC::LocalTensor<half> yLocal = inQueueY.AllocTensor<half>();
        // copy the progress-th tile from the global tensors to the local tensors
        AscendC::DataCopy(xLocal, xGm[progress * TILE_LENGTH], TILE_LENGTH);
        AscendC::DataCopy(yLocal, yGm[progress * TILE_LENGTH], TILE_LENGTH);
        // enqueue the input tensors to the VECIN queues
        inQueueX.EnQue(xLocal);
        inQueueY.EnQue(yLocal);
    }
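AllocTensor, EnQue, DeQue, and FreeTensor behave like a small pool of BUFFER_NUM buffers handed from one stage to the next. The host-side model below is a hypothetical sketch for intuition only: MockQue is not an Ascend C type, and it tracks slot indices without data or hardware synchronization. It shows why each stage must free what it dequeues: with two buffers, a third allocation succeeds only after one has been returned.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>

// Hypothetical model of a queue backed by a fixed number of buffer slots.
class MockQue {
public:
    explicit MockQue(std::size_t bufferNum) {
        for (std::size_t i = 0; i < bufferNum; ++i) free_.push_back(i);
    }
    // Like AllocTensor: take a free slot; returns false if every slot is in use.
    bool Alloc(std::size_t& slot) {
        if (free_.empty()) return false;
        slot = free_.front();
        free_.pop_front();
        return true;
    }
    // Like EnQue: hand a filled slot to the consuming stage.
    void EnQue(std::size_t slot) { ready_.push_back(slot); }
    // Like DeQue: take the oldest filled slot (FIFO order).
    bool DeQue(std::size_t& slot) {
        if (ready_.empty()) return false;
        slot = ready_.front();
        ready_.pop_front();
        return true;
    }
    // Like FreeTensor: return the slot so a later Alloc can reuse it.
    void Free(std::size_t slot) { free_.push_back(slot); }

private:
    std::deque<std::size_t> free_;   // slots available for allocation
    std::deque<std::size_t> ready_;  // slots enqueued and waiting to be dequeued
};
```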

Compute function implementation

    __aicore__ inline void Compute(int32_t progress)
    {
        // dequeue the input tensors from the VECIN queues
        AscendC::LocalTensor<half> xLocal = inQueueX.DeQue<half>();
        AscendC::LocalTensor<half> yLocal = inQueueY.DeQue<half>();
        AscendC::LocalTensor<half> zLocal = outQueueZ.AllocTensor<half>();
        // call the Add instruction: zLocal = xLocal + yLocal
        AscendC::Add(zLocal, xLocal, yLocal, TILE_LENGTH);
        // enqueue the output tensor to the VECOUT queue
        outQueueZ.EnQue<half>(zLocal);
        // free the input tensors so their buffers can be reused
        inQueueX.FreeTensor(xLocal);
        inQueueY.FreeTensor(yLocal);
    }

CopyOut function implementation

    __aicore__ inline void CopyOut(int32_t progress)
    {
        // dequeue the output tensor from the VECOUT queue
        AscendC::LocalTensor<half> zLocal = outQueueZ.DeQue<half>();
        // copy the progress-th tile from the local tensor to the global tensor
        AscendC::DataCopy(zGm[progress * TILE_LENGTH], zLocal, TILE_LENGTH);
        // free the output tensor so its buffer can be reused
        outQueueZ.FreeTensor(zLocal);
    }

The complete add_custom.cpp file

    #include "kernel_operator.h"

    constexpr int32_t TOTAL_LENGTH = 8 * 2048;                            // total length of data
    constexpr int32_t USE_CORE_NUM = 8;                                   // number of cores used
    constexpr int32_t BLOCK_LENGTH = TOTAL_LENGTH / USE_CORE_NUM;         // length computed by each core
    constexpr int32_t TILE_NUM = 8;                                       // split each core's data into 8 tiles
    constexpr int32_t BUFFER_NUM = 2;                                     // number of tensors in each queue
    constexpr int32_t TILE_LENGTH = BLOCK_LENGTH / TILE_NUM / BUFFER_NUM; // halve each tile for double buffering

    class KernelAdd {
    public:
        __aicore__ inline KernelAdd() {}
        __aicore__ inline void Init(GM_ADDR x, GM_ADDR y, GM_ADDR z)
        {
            // each core works on its own BLOCK_LENGTH slice of x, y, and z
            xGm.SetGlobalBuffer((__gm__ half *)x + BLOCK_LENGTH * AscendC::GetBlockIdx(), BLOCK_LENGTH);
            yGm.SetGlobalBuffer((__gm__ half *)y + BLOCK_LENGTH * AscendC::GetBlockIdx(), BLOCK_LENGTH);
            zGm.SetGlobalBuffer((__gm__ half *)z + BLOCK_LENGTH * AscendC::GetBlockIdx(), BLOCK_LENGTH);
            // BUFFER_NUM buffers of TILE_LENGTH halves per queue (size in bytes)
            pipe.InitBuffer(inQueueX, BUFFER_NUM, TILE_LENGTH * sizeof(half));
            pipe.InitBuffer(inQueueY, BUFFER_NUM, TILE_LENGTH * sizeof(half));
            pipe.InitBuffer(outQueueZ, BUFFER_NUM, TILE_LENGTH * sizeof(half));
        }
        __aicore__ inline void Process()
        {
            int32_t loopCount = TILE_NUM * BUFFER_NUM;
            for (int32_t i = 0; i < loopCount; i++) {
                CopyIn(i);
                Compute(i);
                CopyOut(i);
            }
        }

    private:
        __aicore__ inline void CopyIn(int32_t progress)
        {
            AscendC::LocalTensor<half> xLocal = inQueueX.AllocTensor<half>();
            AscendC::LocalTensor<half> yLocal = inQueueY.AllocTensor<half>();
            AscendC::DataCopy(xLocal, xGm[progress * TILE_LENGTH], TILE_LENGTH);
            AscendC::DataCopy(yLocal, yGm[progress * TILE_LENGTH], TILE_LENGTH);
            inQueueX.EnQue(xLocal);
            inQueueY.EnQue(yLocal);
        }
        __aicore__ inline void Compute(int32_t progress)
        {
            AscendC::LocalTensor<half> xLocal = inQueueX.DeQue<half>();
            AscendC::LocalTensor<half> yLocal = inQueueY.DeQue<half>();
            AscendC::LocalTensor<half> zLocal = outQueueZ.AllocTensor<half>();
            AscendC::Add(zLocal, xLocal, yLocal, TILE_LENGTH);
            outQueueZ.EnQue<half>(zLocal);
            inQueueX.FreeTensor(xLocal);
            inQueueY.FreeTensor(yLocal);
        }
        __aicore__ inline void CopyOut(int32_t progress)
        {
            AscendC::LocalTensor<half> zLocal = outQueueZ.DeQue<half>();
            AscendC::DataCopy(zGm[progress * TILE_LENGTH], zLocal, TILE_LENGTH);
            outQueueZ.FreeTensor(zLocal);
        }

    private:
        AscendC::TPipe pipe;
        AscendC::TQue<AscendC::QuePosition::VECIN, BUFFER_NUM> inQueueX, inQueueY;
        AscendC::TQue<AscendC::QuePosition::VECOUT, BUFFER_NUM> outQueueZ;
        AscendC::GlobalTensor<half> xGm;
        AscendC::GlobalTensor<half> yGm;
        AscendC::GlobalTensor<half> zGm;
    };

    extern "C" __global__ __aicore__ void add_custom(GM_ADDR x, GM_ADDR y, GM_ADDR z)
    {
        KernelAdd op;
        op.Init(x, y, z);
        op.Process();
    }

    #ifndef ASCENDC_CPU_DEBUG
    // NPU build only: host-side wrapper that launches the kernel on blockDim cores
    // with the <<<>>> operator (the second launch argument is passed as nullptr)
    void add_custom_do(uint32_t blockDim, void *stream, uint8_t *x, uint8_t *y, uint8_t *z)
    {
        add_custom<<<blockDim, nullptr, stream>>>(x, y, z);
    }
    #endif
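Putting Init and CopyIn/CopyOut together, core `blockIdx` in iteration `progress` starts at global element `blockIdx * BLOCK_LENGTH + progress * TILE_LENGTH`. The plain C++ sketch below (global_index and visit_counts are hypothetical helpers) checks that with 8 cores, 16 iterations, and 128-element tiles, every one of the 16384 elements is processed exactly once.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Global element index for core blockIdx, iteration progress, element i of the tile:
// block offset (from SetGlobalBuffer in Init) + tile offset (from CopyIn/CopyOut).
constexpr std::int64_t global_index(std::int64_t blockIdx, std::int64_t progress, std::int64_t i,
                                    std::int64_t blockLength, std::int64_t tileLength) {
    return blockIdx * blockLength + progress * tileLength + i;
}

// Counts how many times each element of the whole tensor is visited by all cores.
std::vector<int> visit_counts(std::int64_t coreNum, std::int64_t loopCount,
                              std::int64_t blockLength, std::int64_t tileLength) {
    assert(loopCount * tileLength == blockLength);  // tiles must exactly cover one block
    std::vector<int> counts(static_cast<std::size_t>(coreNum * blockLength), 0);
    for (std::int64_t b = 0; b < coreNum; ++b) {
        for (std::int64_t p = 0; p < loopCount; ++p) {
            for (std::int64_t i = 0; i < tileLength; ++i) {
                const std::int64_t idx = global_index(b, p, i, blockLength, tileLength);
                ++counts[static_cast<std::size_t>(idx)];
            }
        }
    }
    return counts;
}
```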

Kernel verification (main.cpp)

Host-side application skeleton

    #include "data_utils.h"
    #ifndef ASCENDC_CPU_DEBUG
    #include "acl/acl.h"
    extern void add_custom_do(uint32_t blockDim, void *stream, uint8_t *x, uint8_t *y, uint8_t *z);
    #else
    #include "tikicpulib.h"
    extern "C" __global__ __aicore__ void add_custom(GM_ADDR x, GM_ADDR y, GM_ADDR z);
    #endif

    int32_t main(int32_t argc, char *argv[])
    {
        size_t inputByteSize = 8 * 2048 * sizeof(uint16_t);  // uint16_t represents half
        size_t outputByteSize = 8 * 2048 * sizeof(uint16_t); // uint16_t represents half
        uint32_t blockDim = 8;
    #ifdef ASCENDC_CPU_DEBUG
        // code that invokes the kernel for CPU debugging
    #else
        // code that runs the operator on the NPU
    #endif
        return 0;
    }
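The host code sizes its buffers with sizeof(uint16_t) because each half value is stored as a 16-bit IEEE 754 binary16 bit pattern. To inspect input_x.bin or output_z.bin from C++, a decoder like this sketch converts those bits to float; half_bits_to_float is a hypothetical helper, not part of data_utils.h.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <limits>

// Decodes an IEEE 754 binary16 bit pattern (1 sign, 5 exponent, 10 mantissa bits) to float.
float half_bits_to_float(std::uint16_t h) {
    const int sign = (h >> 15) & 0x1;
    const int exponent = (h >> 10) & 0x1F;
    const int mantissa = h & 0x3FF;
    float value;
    if (exponent == 0) {
        // zero or subnormal: mantissa * 2^-24
        value = std::ldexp(static_cast<float>(mantissa), -24);
    } else if (exponent == 0x1F) {
        // all-ones exponent: infinity or NaN
        value = mantissa == 0 ? std::numeric_limits<float>::infinity()
                              : std::numeric_limits<float>::quiet_NaN();
    } else {
        // normal: (1 + mantissa/1024) * 2^(exponent-15) == (1024 + mantissa) * 2^(exponent-25)
        value = std::ldexp(static_cast<float>(mantissa + 1024), exponent - 25);
    }
    return sign ? -value : value;
}
```

For example, 0x3C00 decodes to 1.0 and 0x7BFF to 65504, the largest finite half value.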

CPU-side debugging code (for the ASCENDC_CPU_DEBUG branch)

    // allocate shared memory with GmAlloc and initialize the data
    uint8_t *x = (uint8_t *)AscendC::GmAlloc(inputByteSize);
    uint8_t *y = (uint8_t *)AscendC::GmAlloc(inputByteSize);
    uint8_t *z = (uint8_t *)AscendC::GmAlloc(outputByteSize);
    ReadFile("./input/input_x.bin", inputByteSize, x, inputByteSize);
    ReadFile("./input/input_y.bin", inputByteSize, y, inputByteSize);
    // call the ICPU_RUN_KF debugging macro to run the kernel on the CPU (AI Vector mode)
    AscendC::SetKernelMode(KernelMode::AIV_MODE);
    ICPU_RUN_KF(add_custom, blockDim, x, y, z); // use this macro for cpu debug
    // write out the output data
    WriteFile("./output/output_z.bin", z, outputByteSize);
    // release the allocated memory with GmFree
    AscendC::GmFree((void *)x);
    AscendC::GmFree((void *)y);
    AscendC::GmFree((void *)z);
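ReadFile and WriteFile come from the sample's data_utils.h, which this post does not show. As a rough idea of what such helpers do, here is a hypothetical sketch using std::fstream; write_bin and read_bin are my own names, and the real helpers' signatures and logging may differ.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <fstream>
#include <string>

// Hypothetical stand-in for WriteFile: dump `size` raw bytes to a binary file.
bool write_bin(const std::string& path, const void* data, std::size_t size) {
    std::ofstream out(path, std::ios::binary);
    if (!out) return false;
    out.write(static_cast<const char*>(data), static_cast<std::streamsize>(size));
    return static_cast<bool>(out);
}

// Hypothetical stand-in for ReadFile: read exactly `size` bytes into `data`;
// returns false if the file is missing or shorter than expected.
bool read_bin(const std::string& path, void* data, std::size_t size) {
    std::ifstream in(path, std::ios::binary);
    if (!in) return false;
    in.read(static_cast<char*>(data), static_cast<std::streamsize>(size));
    return in.gcount() == static_cast<std::streamsize>(size);
}
```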

NPU-side invocation code (for the #else branch)

    // initialize AscendCL
    CHECK_ACL(aclInit(nullptr));
    // acquire runtime resources: device and stream
    int32_t deviceId = 0;
    CHECK_ACL(aclrtSetDevice(deviceId));
    aclrtStream stream = nullptr;
    CHECK_ACL(aclrtCreateStream(&stream));
    // allocate host memory
    uint8_t *xHost, *yHost, *zHost;
    uint8_t *xDevice, *yDevice, *zDevice;
    CHECK_ACL(aclrtMallocHost((void **)(&xHost), inputByteSize));
    CHECK_ACL(aclrtMallocHost((void **)(&yHost), inputByteSize));
    CHECK_ACL(aclrtMallocHost((void **)(&zHost), outputByteSize));
    // allocate device memory
    CHECK_ACL(aclrtMalloc((void **)&xDevice, inputByteSize, ACL_MEM_MALLOC_HUGE_FIRST));
    CHECK_ACL(aclrtMalloc((void **)&yDevice, inputByteSize, ACL_MEM_MALLOC_HUGE_FIRST));
    CHECK_ACL(aclrtMalloc((void **)&zDevice, outputByteSize, ACL_MEM_MALLOC_HUGE_FIRST));
    // initialize host memory from the input files and copy it to the device
    ReadFile("./input/input_x.bin", inputByteSize, xHost, inputByteSize);
    ReadFile("./input/input_y.bin", inputByteSize, yHost, inputByteSize);
    CHECK_ACL(aclrtMemcpy(xDevice, inputByteSize, xHost, inputByteSize, ACL_MEMCPY_HOST_TO_DEVICE));
    CHECK_ACL(aclrtMemcpy(yDevice, inputByteSize, yHost, inputByteSize, ACL_MEMCPY_HOST_TO_DEVICE));
    // launch the kernel; add_custom_do wraps the <<<>>> kernel call
    add_custom_do(blockDim, stream, xDevice, yDevice, zDevice);
    CHECK_ACL(aclrtSynchronizeStream(stream));
    // copy the result from the device back to the host
    CHECK_ACL(aclrtMemcpy(zHost, outputByteSize, zDevice, outputByteSize, ACL_MEMCPY_DEVICE_TO_HOST));
    WriteFile("./output/output_z.bin", zHost, outputByteSize);
    // release the allocated memory
    CHECK_ACL(aclrtFree(xDevice));
    CHECK_ACL(aclrtFree(yDevice));
    CHECK_ACL(aclrtFree(zDevice));
    CHECK_ACL(aclrtFreeHost(xHost));
    CHECK_ACL(aclrtFreeHost(yHost));
    CHECK_ACL(aclrtFreeHost(zHost));
    // release runtime resources and deinitialize AscendCL
    CHECK_ACL(aclrtDestroyStream(stream));
    CHECK_ACL(aclrtResetDevice(deviceId));
    CHECK_ACL(aclFinalize());
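Every AscendCL call above is wrapped in CHECK_ACL, whose definition lives in the sample's data_utils.h and is not shown in this post. The sketch below is a hypothetical macro in the same spirit. It assumes the wrapped call returns an integer status where 0 means success; fake_ok and fake_fail are dummy functions, not AscendCL APIs, and the failure counter exists only so the behavior can be checked.

```cpp
#include <cassert>
#include <iostream>

// Counts failed checks (for illustration and testing only).
static int g_check_failures = 0;

// Hypothetical CHECK_ACL-style macro: evaluate the call once, report non-zero status
// with file, line, and the call's source text, then continue execution.
#define CHECK_STATUS(expr)                                                   \
    do {                                                                     \
        const int status_ = static_cast<int>(expr);                          \
        if (status_ != 0) {                                                  \
            ++g_check_failures;                                              \
            std::cerr << __FILE__ << ":" << __LINE__ << " " #expr            \
                      << " failed with status " << status_ << std::endl;     \
        }                                                                    \
    } while (0)

// Dummy calls used to exercise the macro (not AscendCL APIs).
int fake_ok() { return 0; }
int fake_fail() { return 1; }
```

The do/while (0) wrapper lets the macro be used like a single statement, as in `CHECK_STATUS(fake_ok());`.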

The complete main.cpp file

    #include "data_utils.h"
    #ifndef ASCENDC_CPU_DEBUG
    #include "acl/acl.h"
    extern void add_custom_do(uint32_t blockDim, void *stream, uint8_t *x, uint8_t *y, uint8_t *z);
    #else
    #include "tikicpulib.h"
    extern "C" __global__ __aicore__ void add_custom(GM_ADDR x, GM_ADDR y, GM_ADDR z);
    #endif

    int32_t main(int32_t argc, char *argv[])
    {
        uint32_t blockDim = 8;
        size_t inputByteSize = 8 * 2048 * sizeof(uint16_t);
        size_t outputByteSize = 8 * 2048 * sizeof(uint16_t);

    #ifdef ASCENDC_CPU_DEBUG
        uint8_t *x = (uint8_t *)AscendC::GmAlloc(inputByteSize);
        uint8_t *y = (uint8_t *)AscendC::GmAlloc(inputByteSize);
        uint8_t *z = (uint8_t *)AscendC::GmAlloc(outputByteSize);

        ReadFile("./input/input_x.bin", inputByteSize, x, inputByteSize);
        ReadFile("./input/input_y.bin", inputByteSize, y, inputByteSize);

        AscendC::SetKernelMode(KernelMode::AIV_MODE);
        ICPU_RUN_KF(add_custom, blockDim, x, y, z); // use this macro for cpu debug

        WriteFile("./output/output_z.bin", z, outputByteSize);

        AscendC::GmFree((void *)x);
        AscendC::GmFree((void *)y);
        AscendC::GmFree((void *)z);
    #else
        CHECK_ACL(aclInit(nullptr));
        int32_t deviceId = 0;
        CHECK_ACL(aclrtSetDevice(deviceId));
        aclrtStream stream = nullptr;
        CHECK_ACL(aclrtCreateStream(&stream));

        uint8_t *xHost, *yHost, *zHost;
        uint8_t *xDevice, *yDevice, *zDevice;

        CHECK_ACL(aclrtMallocHost((void **)(&xHost), inputByteSize));
        CHECK_ACL(aclrtMallocHost((void **)(&yHost), inputByteSize));
        CHECK_ACL(aclrtMallocHost((void **)(&zHost), outputByteSize));
        CHECK_ACL(aclrtMalloc((void **)&xDevice, inputByteSize, ACL_MEM_MALLOC_HUGE_FIRST));
        CHECK_ACL(aclrtMalloc((void **)&yDevice, inputByteSize, ACL_MEM_MALLOC_HUGE_FIRST));
        CHECK_ACL(aclrtMalloc((void **)&zDevice, outputByteSize, ACL_MEM_MALLOC_HUGE_FIRST));

        ReadFile("./input/input_x.bin", inputByteSize, xHost, inputByteSize);
        ReadFile("./input/input_y.bin", inputByteSize, yHost, inputByteSize);

        CHECK_ACL(aclrtMemcpy(xDevice, inputByteSize, xHost, inputByteSize, ACL_MEMCPY_HOST_TO_DEVICE));
        CHECK_ACL(aclrtMemcpy(yDevice, inputByteSize, yHost, inputByteSize, ACL_MEMCPY_HOST_TO_DEVICE));

        add_custom_do(blockDim, stream, xDevice, yDevice, zDevice);
        CHECK_ACL(aclrtSynchronizeStream(stream));

        CHECK_ACL(aclrtMemcpy(zHost, outputByteSize, zDevice, outputByteSize, ACL_MEMCPY_DEVICE_TO_HOST));
        WriteFile("./output/output_z.bin", zHost, outputByteSize);

        CHECK_ACL(aclrtFree(xDevice));
        CHECK_ACL(aclrtFree(yDevice));
        CHECK_ACL(aclrtFree(zDevice));
        CHECK_ACL(aclrtFreeHost(xHost));
        CHECK_ACL(aclrtFreeHost(yHost));
        CHECK_ACL(aclrtFreeHost(zHost));

        CHECK_ACL(aclrtDestroyStream(stream));
        CHECK_ACL(aclrtResetDevice(deviceId));
        CHECK_ACL(aclFinalize());
    #endif
        return 0;
    }

Running the complete sample

Clone the code repository and enter the sample directory:

    git clone -b v0.3-8.0.0.alpha003 https://gitee.com/ascend/samples
    cd samples/operator/ascendc/0_introduction/3_add_kernellaunch/AddKernelInvocationNeo

Build and run script

General form of the command:

    bash run.sh -r <run_mode> -v <soc_version>

Run in CPU debug mode:

    bash run.sh -r cpu -v Ascend910B3

Run on the NPU:

    bash run.sh -r npu -v Ascend910B3

Run result

test pass
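The "test pass" message comes from comparing the operator output with the golden data (verify_result.py). A minimal C++ sketch of that kind of comparison follows. The combined relative/absolute tolerance rule and the 1e-3 values are assumptions for illustration, not the thresholds the sample script uses, and outputs_match is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Element i passes if |out[i] - golden[i]| <= atol + rtol * |golden[i]| (assumed rule).
bool outputs_match(const std::vector<float>& out, const std::vector<float>& golden,
                   float rtol = 1e-3f, float atol = 1e-3f) {
    if (out.size() != golden.size()) return false;
    for (std::size_t i = 0; i < out.size(); ++i) {
        // Written as !(diff <= tol) so that a NaN difference also counts as a mismatch.
        if (!(std::fabs(out[i] - golden[i]) <= atol + rtol * std::fabs(golden[i]))) {
            return false;
        }
    }
    return true;
}
```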

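Summary

This Add operator walkthrough covered the basic workflow of operator development with a kernel direct-invocation project, laying a foundation for learning to develop other operators by analogy. Because I ran it in the Huawei Cloud Developer Space environment, where permissions may differ, some permission-denied errors appeared, but they did not affect the final run. These errors should not occur on your own development board (if you have one).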
