Deploying a Qwen2-7B LLM API with the MindIE framework on Ascend 910A (single-card and multi-card)


This post records the overall workflow.

1. MindIE environment and model preparation

Pulling the prebuilt image directly is recommended.

Image address (ovaijisuan.com image repository): http://mirrors.cn-central-221.ovaijisuan.com/detail/149.html


Set up the environment by following the image's usage instructions.

Pull the image:

docker pull swr.cn-central-221.ovaijisuan.com/wh-aicc-fae/mindie:910a-ascend_23.0.0-cann_8.0.rc3-py_3.10-ubuntu_22.04-aarch64-mindie_1.0.t65

Start the container:

docker run -it --name 910A_MindIE_1.0.T65_aarch64_dev0-7 --ipc=host --net=host \
--device=/dev/davinci0 \
--device=/dev/davinci1 \
--device=/dev/davinci2 \
--device=/dev/davinci3 \
--device=/dev/davinci4 \
--device=/dev/davinci5 \
--device=/dev/davinci6 \
--device=/dev/davinci7 \
--device=/dev/davinci_manager \
--device=/dev/devmm_svm \
--device=/dev/hisi_hdc \
-v /usr/local/dcmi:/usr/local/dcmi \
-v /usr/local/bin/npu-smi:/usr/local/bin/npu-smi \
-v /usr/local/Ascend/driver/lib64/common:/usr/local/Ascend/driver/lib64/common \
-v /usr/local/Ascend/driver/lib64/driver:/usr/local/Ascend/driver/lib64/driver \
-v /etc/ascend_install.info:/etc/ascend_install.info \
-v /etc/vnpu.cfg:/etc/vnpu.cfg \
-v /usr/local/Ascend/driver/version.info:/usr/local/Ascend/driver/version.info \
-v /home/aicc:/home/aicc \
swr.cn-central-221.ovaijisuan.com/wh-aicc-fae/mindie:910a-ascend_23.0.0-cann_8.0.rc3-py_3.10-ubuntu_22.04-aarch64-mindie_1.0.t65 /bin/bash

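Note: this command maps all of NPUs 0-7 into the container. Because the 910 does not support sharing a card between containers (once a card is taken by one container, no other container can use it), change the --device=/dev/davinciX lines if you only want to mount some of the cards. For example, to mount only card 0: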

docker run -it --name 910A_MindIE_1.0.T65_aarch64_dev0 --ipc=host --net=host \
--device=/dev/davinci0 \
--device=/dev/davinci_manager \
--device=/dev/devmm_svm \
--device=/dev/hisi_hdc \
-v /usr/local/dcmi:/usr/local/dcmi \
-v /usr/local/bin/npu-smi:/usr/local/bin/npu-smi \
-v /usr/local/Ascend/driver/lib64/common:/usr/local/Ascend/driver/lib64/common \
-v /usr/local/Ascend/driver/lib64/driver:/usr/local/Ascend/driver/lib64/driver \
-v /etc/ascend_install.info:/etc/ascend_install.info \
-v /etc/vnpu.cfg:/etc/vnpu.cfg \
-v /usr/local/Ascend/driver/version.info:/usr/local/Ascend/driver/version.info \
-v /home/aicc:/home/aicc \
swr.cn-central-221.ovaijisuan.com/wh-aicc-fae/mindie:910a-ascend_23.0.0-cann_8.0.rc3-py_3.10-ubuntu_22.04-aarch64-mindie_1.0.t65 /bin/bash
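The two docker run commands differ only in their --device=/dev/davinciN lines. If you switch card sets often, a small helper can generate those flags. npu_device_args below is a hypothetical sketch (not part of Docker or MindIE); it always appends the three management devices used in both commands above.

```python
# Hypothetical helper (not part of Docker or MindIE): builds the --device
# flags used in the docker run commands above for a chosen set of NPUs.

def npu_device_args(card_ids):
    """Return docker --device flags for the given NPU card IDs.

    Each card N is exposed as /dev/davinciN; the three management devices
    are included in every case, as in the commands above.
    """
    ids = sorted(set(card_ids))  # de-duplicate and keep a stable order
    if not ids:
        raise ValueError("at least one NPU card ID is required")
    args = [f"--device=/dev/davinci{i}" for i in ids]
    # Management/driver devices needed regardless of which cards are mounted
    args += [
        "--device=/dev/davinci_manager",
        "--device=/dev/devmm_svm",
        "--device=/dev/hisi_hdc",
    ]
    return args


if __name__ == "__main__":
    # Mount only cards 2 and 3 and print the flags as a shell fragment
    print(" \\\n".join(npu_device_args([2, 3])))
```

Run as a script, it prints the flags for cards 2 and 3 joined with line continuations, ready to paste into the docker run command in place of the davinci lines.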

After entering the container, download the model weights:

# pip install modelscope
# Download the model; replace cache_dir with your local path
from modelscope import snapshot_download
model_dir = snapshot_download('qwen/Qwen2-7B-Instruct', cache_dir='/home')

In config.json under the weight directory, change torch_dtype to float16:

cd /home/qwen/Qwen2-7B-Instruct

vim config.json
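If you prefer not to edit the file in vim, the same change can be scripted. This is a minimal sketch using only the Python standard library; set_torch_dtype is a hypothetical helper name, and it assumes config.json sits directly in the weight directory, as in the cd command above.

```python
import json
from pathlib import Path


def set_torch_dtype(model_dir, dtype="float16"):
    """Set torch_dtype in <model_dir>/config.json and return the previous value."""
    config_path = Path(model_dir) / "config.json"
    config = json.loads(config_path.read_text(encoding="utf-8"))
    previous = config.get("torch_dtype")  # None if the key was absent
    config["torch_dtype"] = dtype
    # Rewrite the whole file; indent=2 keeps it readable, ensure_ascii=False
    # leaves any non-ASCII strings unescaped
    config_path.write_text(
        json.dumps(config, indent=2, ensure_ascii=False) + "\n", encoding="utf-8"
    )
    return previous
```

For example, set_torch_dtype("/home/qwen/Qwen2-7B-Instruct") rewrites the file in place and returns the old value. Note that the whole file is re-serialized, so its original indentation is not preserved.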

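Model inference test. Because the script is launched as a module (-m examples.run_pa), run it from the directory that contains examples/, which in this image is presumably /usr/local/Ascend/atb-models.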

torchrun --nproc_per_node <number of cards> \
--master_port 20038 \
-m examples.run_pa \
--model_path <weight path> \
--input_text ["<input text>"] \
--is_chat_model \
--max_output_length <output length>

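# Example
torchrun --nproc_per_node 1 \
--master_port 20038 \
-m examples.run_pa \
--model_path /home/qwen/Qwen2-7B-Instruct \
--input_text ["Hello"] \
--is_chat_model \
--max_output_length 128

Performance test: configure the environment and environment variables. (In my experience, leaving out the exports seemed to make no difference, and the export commands sometimes even reported errors, but this did not affect the service afterwards.)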

export HCCL_DETERMINISTIC=0

export LCCL_DETERMINISTIC=0

export HCCL_BUFFSIZE=120

export ATB_WORKSPACE_MEM_ALLOC_GLOBAL=1

export ASCEND_RT_VISIBLE_DEVICES="0,1,2,3,4,5,6,7"

# For a single card, use "0" no matter which physical card was mounted into the container

#export ASCEND_RT_VISIBLE_DEVICES="0"
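The exports above can also be collected into a dictionary, which is convenient when launching the tests from a Python script (for example via os.environ.update or the env argument of subprocess.run). service_env is a hypothetical helper. Its ASCEND_RT_VISIBLE_DEVICES value generalizes the single-card comment above and assumes that the cards mounted into a container are numbered from 0 inside it.

```python
def service_env(num_cards):
    """Return the environment variables from the export block above.

    ASSUMPTION: cards mounted into the container are numbered from 0
    inside it, so num_cards cards are visible as "0,...,num_cards-1"
    (the single-card comment uses "0" whichever card is mounted).
    """
    if num_cards < 1:
        raise ValueError("num_cards must be >= 1")
    return {
        "HCCL_DETERMINISTIC": "0",
        "LCCL_DETERMINISTIC": "0",
        "HCCL_BUFFSIZE": "120",
        "ATB_WORKSPACE_MEM_ALLOC_GLOBAL": "1",
        "ASCEND_RT_VISIBLE_DEVICES": ",".join(str(i) for i in range(num_cards)),
    }


if __name__ == "__main__":
    # Print equivalent export lines for a single-card container
    for key, value in service_env(1).items():
        print(f'export {key}="{value}"')
```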

cd /usr/local/Ascend/atb-models/tests/modeltest

pip install -r requirements.txt

Run the test:

#bash run.sh pa_fp16 performance [[input,output]] batchsize modelname model_path device_num

bash run.sh pa_fp16 full_GSM8K 5 1 qwen /home/qwen/Qwen2-7B-Instruct 1

bash run.sh pa_fp16 performance [[1024,1024]] 1 qwen /home/qwen/Qwen2-7B-Instruct 1

The test results are saved under the path printed in the console output:

/usr/local/Ascend/atb-models/tests/modeltest/result/

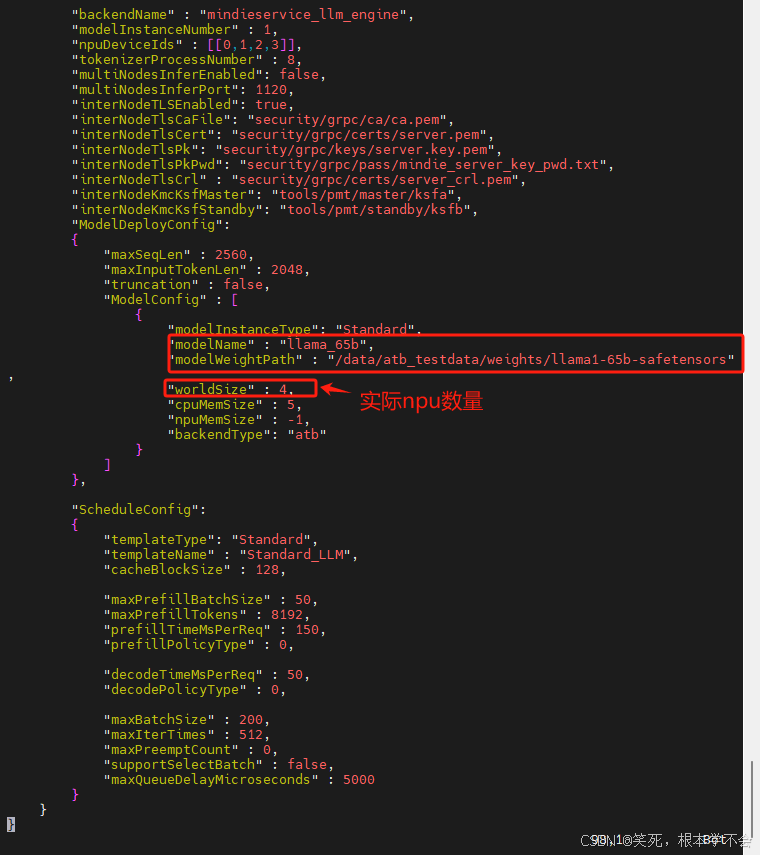
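The performance command takes positional arguments in the order given by the commented template (bash run.sh pa_fp16 performance [[input,output]] batchsize modelname model_path device_num). A hypothetical argv builder for it is sketched below; it writes the [[input,output]] list without spaces so that it stays a single argument. Passing several pairs is an assumption based on the nested-list syntax; the examples in this post use only one.

```python
def modeltest_perf_cmd(pairs, batch_size, model_name, model_path, device_num):
    """Build argv for:
    bash run.sh pa_fp16 performance [[in,out],...] batchsize modelname model_path device_num
    """
    if not pairs:
        raise ValueError("at least one (input_len, output_len) pair is required")
    # e.g. [(1024, 1024)] -> "[[1024,1024]]", with no spaces
    shape = "[" + ",".join(f"[{i},{o}]" for i, o in pairs) + "]"
    return [
        "bash", "run.sh", "pa_fp16", "performance", shape,
        str(batch_size), model_name, model_path, str(device_num),
    ]
```

The resulting list can be passed to subprocess.run(cmd, cwd="/usr/local/Ascend/atb-models/tests/modeltest", check=True). Since no shell is involved, the brackets are never treated as a glob pattern.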

2. Modifying the MindIE configuration file

First, configure the file /usr/local/Ascend/mindie/latest/mindie-service/conf/config.json

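(For the detailed meaning of each parameter, see the Ascend Community page "配置参数说明-参数说明-快速开始-MindIE-Service开发指南-服务化集成部署-MindIE1.0.RC1开发文档-昇腾社区", i.e. Configuration Parameter Description > Quick Start > MindIE-Service Developer Guide > Service-Oriented Integrated Deployment > MindIE 1.0.RC1 documentation.)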

cd /usr/local/Ascend/mindie/latest/mindie-service/

vim conf/config.json

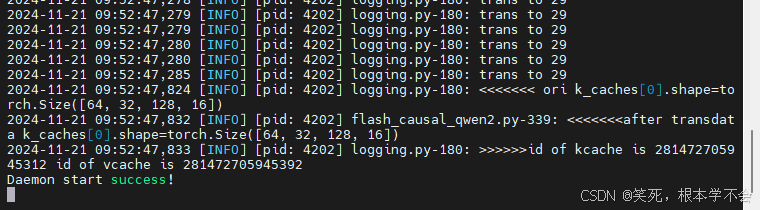
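The screenshot of the edited config.json is not reproduced in text here. As a hedged sketch, the fields typically changed for a single-card Qwen2-7B deployment can be applied with a script. The key paths in QWEN2_7B_SINGLE_CARD are my assumption about the MindIE 1.0 layout, not taken from this post, so check each one against your own file and the parameter documentation above. set_nested raises KeyError instead of silently adding a field when a path does not exist, and the file is written only after every update succeeds. Port 1025 and modelName "qwen" are chosen to match the requests in section 4, and httpsEnabled is set to False because those requests use plain HTTP.

```python
import json
from pathlib import Path


def set_nested(config, key_path, value):
    """Set config[k1][k2]...[kn] = value; integer items in key_path index lists.

    Raises KeyError/IndexError if any part of the path is missing, so a
    wrong key name fails loudly instead of adding a new field.
    """
    node = config
    for key in key_path[:-1]:
        node = node[key]
    last = key_path[-1]
    if isinstance(node, dict) and last not in node:
        raise KeyError(f"{'.'.join(map(str, key_path))} not found in config")
    node[last] = value  # for lists, an out-of-range index raises IndexError


def update_config_file(path, updates):
    """Apply {key_path_tuple: value} updates to a JSON config file in place."""
    path = Path(path)
    config = json.loads(path.read_text(encoding="utf-8"))
    for key_path, value in updates.items():
        set_nested(config, key_path, value)
    # Only reached if every update succeeded
    path.write_text(json.dumps(config, indent=4, ensure_ascii=False) + "\n", encoding="utf-8")


# ASSUMPTION: these key paths reflect my understanding of the MindIE 1.0
# config.json layout; verify each one against your own conf/config.json.
MODEL = ("BackendConfig", "ModelDeployConfig", "ModelConfig", 0)
QWEN2_7B_SINGLE_CARD = {
    ("ServerConfig", "port"): 1025,            # matches the curl examples
    ("ServerConfig", "httpsEnabled"): False,   # the examples use plain http://
    ("BackendConfig", "npuDeviceIds"): [[0]],  # logical card 0 in the container
    MODEL + ("modelName",): "qwen",            # matches "model": "qwen"
    MODEL + ("modelWeightPath",): "/home/qwen/Qwen2-7B-Instruct",
    MODEL + ("worldSize",): 1,                 # number of cards used
}
```

Usage: back up the file first, then call update_config_file("/usr/local/Ascend/mindie/latest/mindie-service/conf/config.json", QWEN2_7B_SINGLE_CARD).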

3. Start the service

bin/mindieservice_daemon

The service starts successfully.


Note: if startup fails, check the log files under /usr/local/Ascend/mindie/1.*/mindie-service/logs.
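When the daemon fails to start, the most recently modified files in that logs directory are usually the relevant ones. The sketch below lists them newest first and returns the tail of one. The file names inside logs/ are not given in this post, so it simply searches the whole directory tree by modification time; newest_logs and tail are hypothetical helpers.

```python
import glob
import os

# "1.*" matches the versioned install directory, as in the note above;
# "**" with recursive=True also descends into subdirectories of logs/
DEFAULT_PATTERN = "/usr/local/Ascend/mindie/1.*/mindie-service/logs/**/*"


def newest_logs(pattern=DEFAULT_PATTERN, count=3):
    """Return up to `count` files matching `pattern`, newest first by mtime."""
    files = {p for p in glob.glob(pattern, recursive=True) if os.path.isfile(p)}
    return sorted(files, key=os.path.getmtime, reverse=True)[:count]


def tail(path, lines=50):
    """Return the last `lines` lines of a text file."""
    with open(path, encoding="utf-8", errors="replace") as f:
        return f.readlines()[-lines:]
```

For example, print("".join(tail(newest_logs()[0]))) shows the end of the newest log; newest_logs() returns an empty list if nothing matches, so check it before indexing.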

4. API access

apt update

apt install curl

curl -H "Accept: application/json" -H "Content-type: application/json" -X POST -d '{
  "inputs": "My name is Olivier and I",
  "parameters": {
    "decoder_input_details": true,
    "details": true,
    "do_sample": true,
    "max_new_tokens": 20,
    "repetition_penalty": 1.03,
    "return_full_text": false,
    "seed": null,
    "temperature": 0.5,
    "top_k": 10,
    "top_p": 0.95,
    "truncate": null,
    "typical_p": 0.5,
    "watermark": false
  }
}' http://127.0.0.1:1025/generate

Access in OpenAI format:

time curl -H "Accept: application/json" -H "Content-type: application/json" -X POST -d '{
  "model": "qwen",
  "messages": [{
    "role": "user",
    "content": "I have a five-day holiday and want to visit Hainan. Please give me a travel guide."
  }],
  "max_tokens": 512,
  "presence_penalty": 1.03,
  "frequency_penalty": 1.0,
  "seed": null,
  "temperature": 0.5,
  "top_p": 0.95,
  "stream": false
}' http://127.0.0.1:1025/v1/chat/completions # replace 127.0.0.1 with the server's actual IP address
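For scripted access, the same two requests can be sent with Python's standard library instead of curl. This is a minimal sketch: BASE_URL mirrors the curl examples, post_json returns the /generate response as parsed JSON without assuming its shape, and chat_reply assumes the chat response follows the OpenAI chat-completions schema (choices[0].message.content). Streaming responses ("stream": true) are not handled.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:1025"  # replace 127.0.0.1 with the server's actual IP


def build_request(path, payload, base_url=BASE_URL):
    """Build a JSON POST request equivalent to the curl commands above."""
    return urllib.request.Request(
        base_url + path,
        data=json.dumps(payload, ensure_ascii=False).encode("utf-8"),
        headers={"Accept": "application/json", "Content-Type": "application/json"},
        method="POST",
    )


def post_json(path, payload, base_url=BASE_URL, timeout=300):
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(build_request(path, payload, base_url), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def chat_reply(response):
    """Extract the assistant text, assuming an OpenAI-style chat completion."""
    return response["choices"][0]["message"]["content"]
```

Usage against a running service: chat_reply(post_json("/v1/chat/completions", {"model": "qwen", "messages": [{"role": "user", "content": "Hello"}], "max_tokens": 512, "stream": False})).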

